GPT-3 x Healthcare: Democratizing AI

The reason I became a thinkboi is because when the computers come for all of your jobs I will be safe. It turns out I’m probably wrong.

If you were even on the fringes of tech Twitter, there’s no doubt you saw examples using OpenAI’s new GPT-3 language model, likely the most advanced natural language processing model released so far. It seems to understand what you’re saying, and can actually have an intelligible conversation with you or translate your sentence into other formats like code.

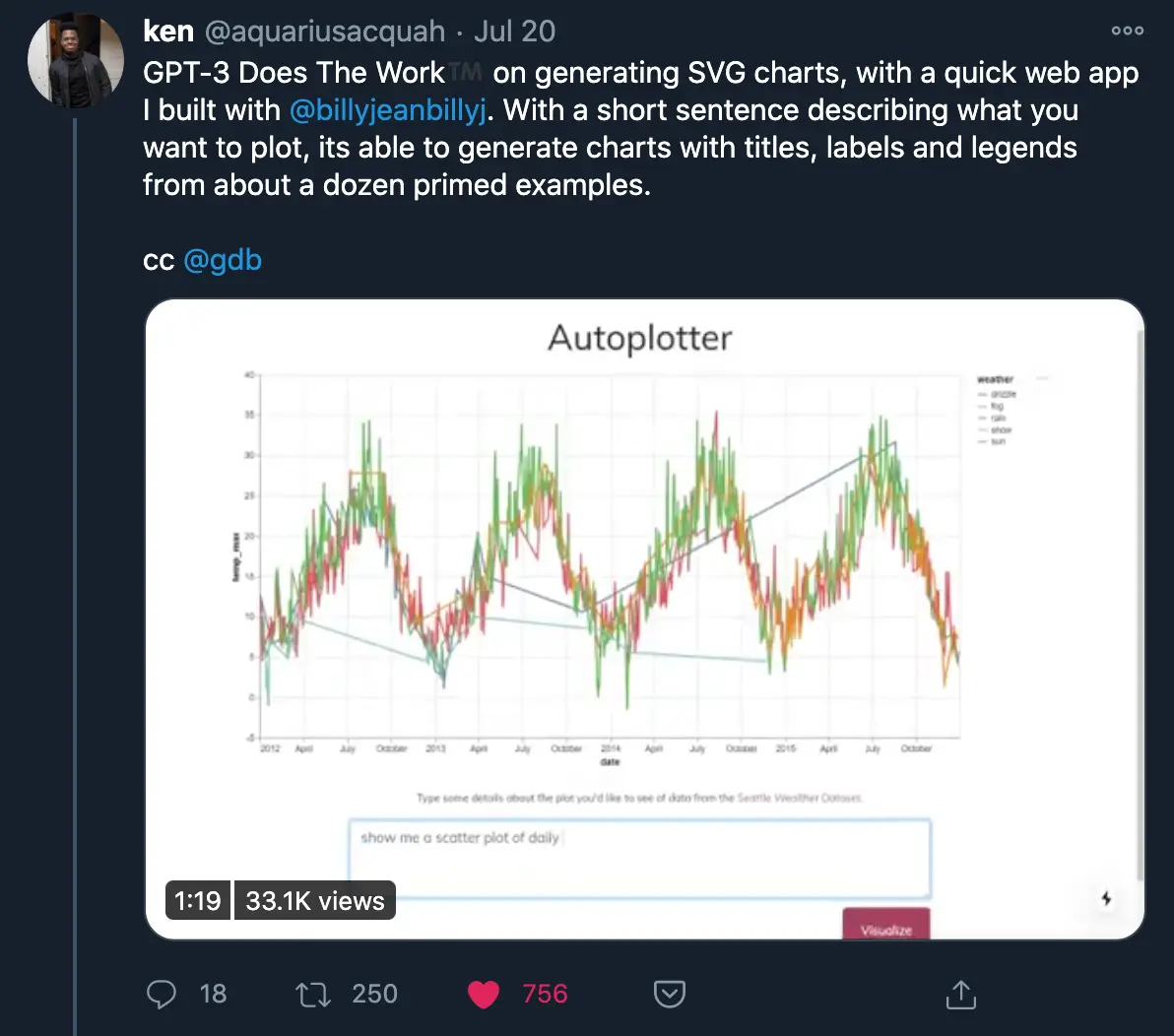

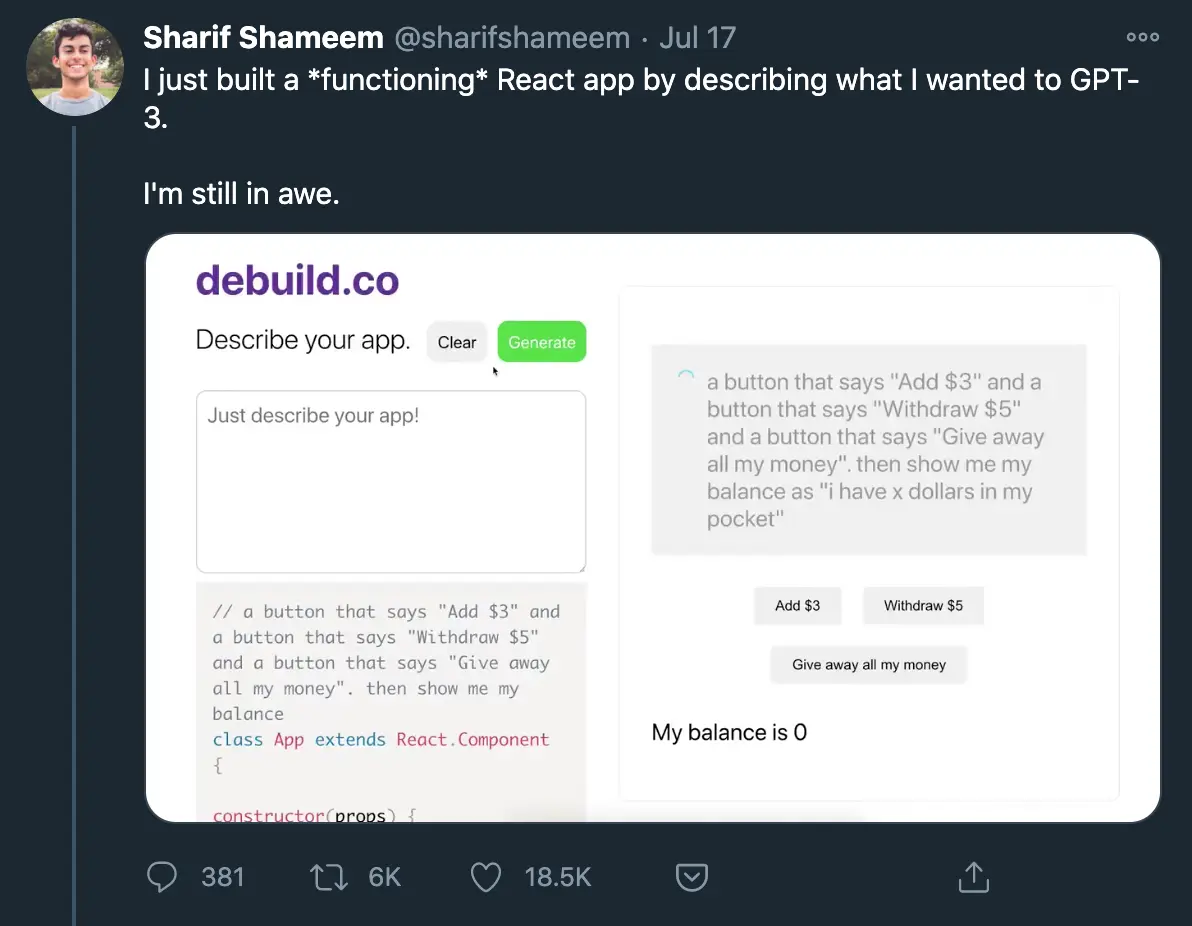

People were building some wild projects; you can see a whole thread of them here. I thought this one in particular was very cool.

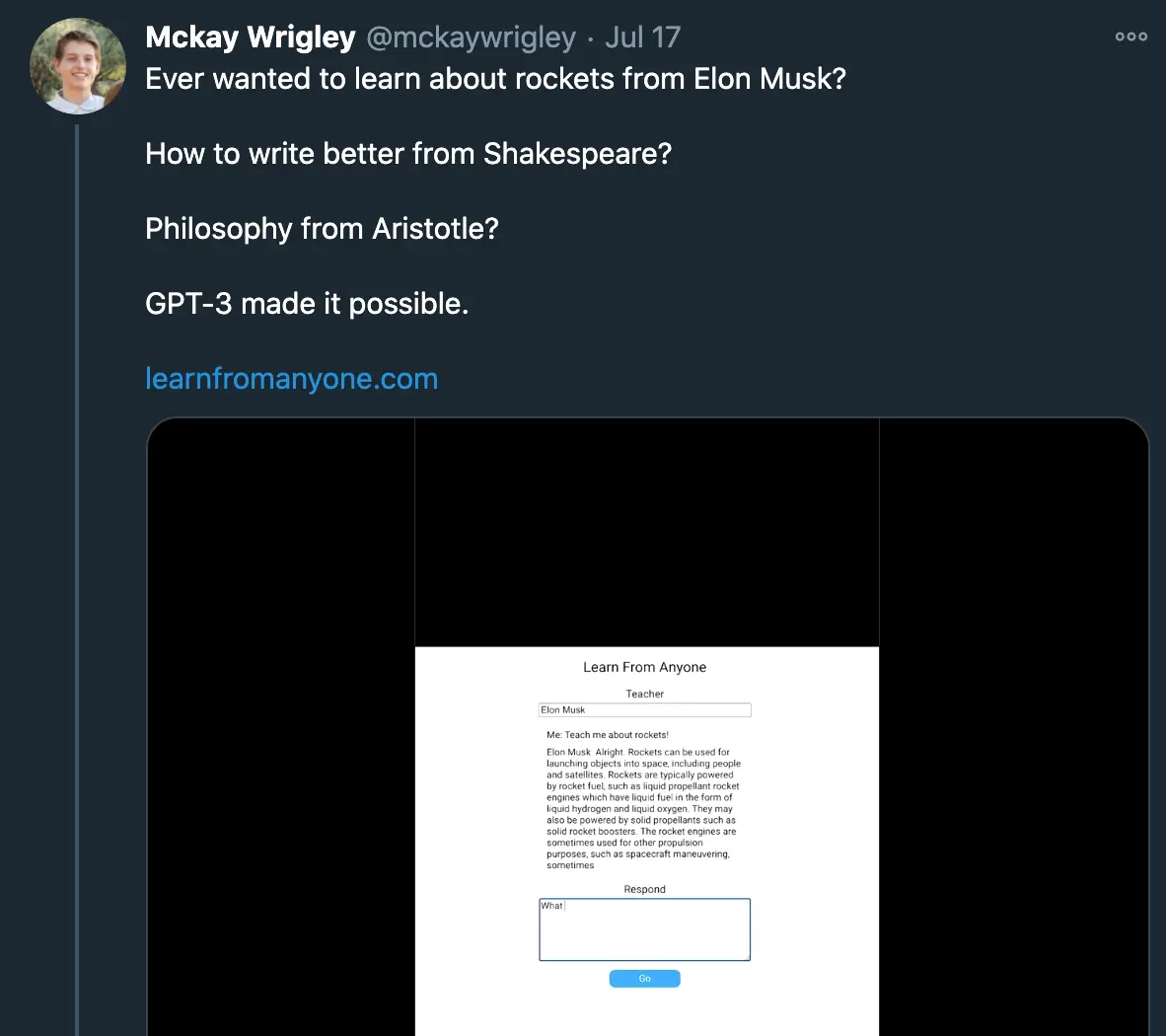

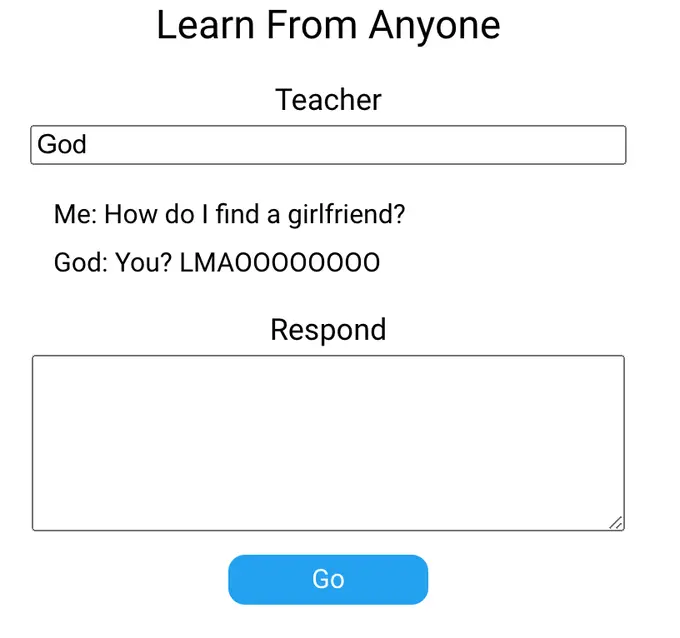

Then this demo built an application using GPT-3 that could teach you about different topics.

But I knew it was advanced when I gave it a spin myself.

How GPT-3 works and its limitations

I’m not gonna sit here and pretend like I’m actually an expert here or even that I have a firm grasp on GPT-3. But here’s my understanding of the model, synthesized from what’s been written by actual experts + talking to people.

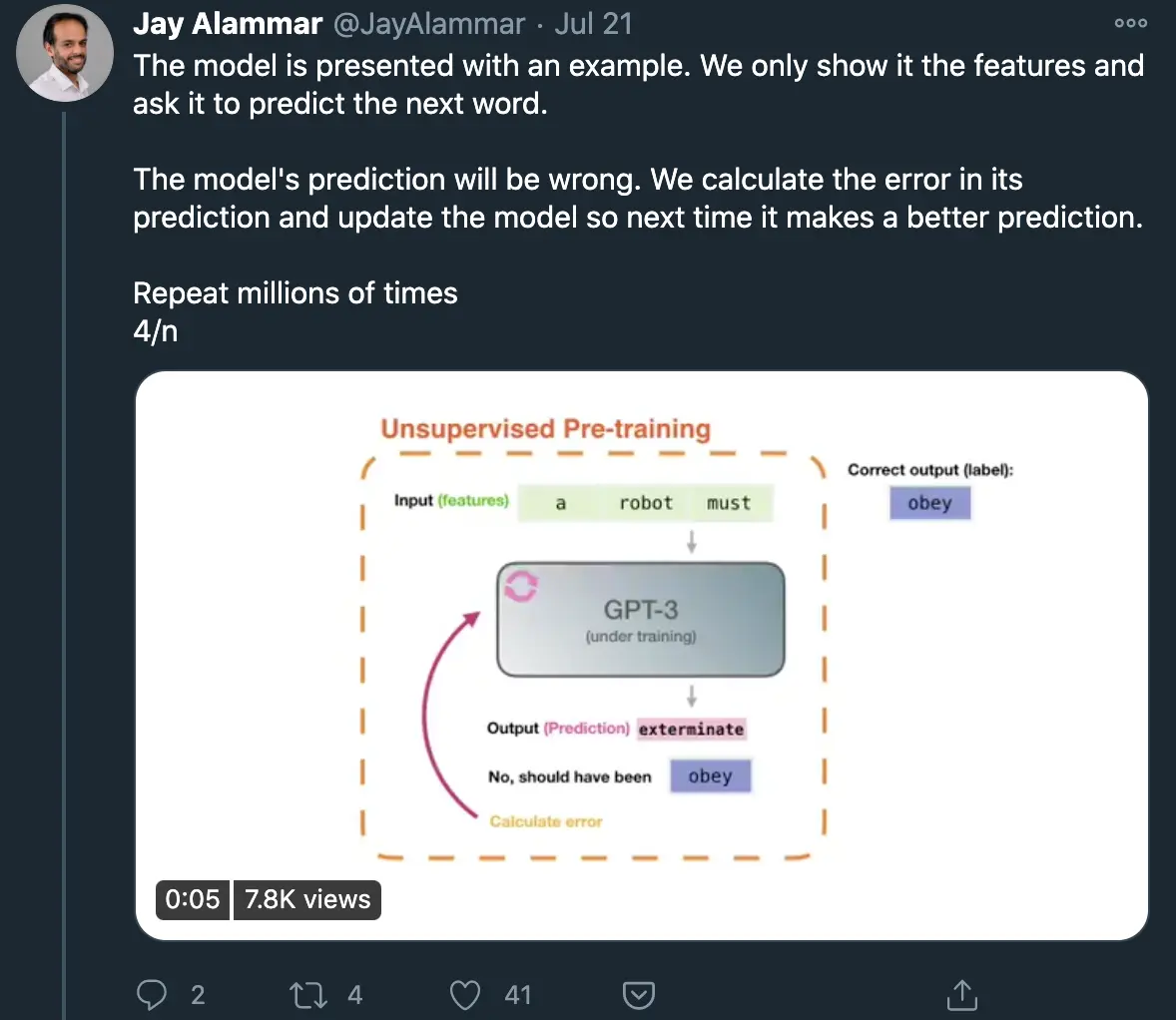

This thread does a good job explaining with visuals and this article goes a bit more under the hood, but the gist is that GPT-3 was trained on text from effectively the entire internet (roughly 300B tokens) and uses 175B parameters to guess what the next word should be. It’s essentially the most general-purpose model of how language works to date, and it can be applied to many areas (including translating language into other mediums like code, numbers, etc.).
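To make "guess what the next word should be" a bit more concrete, here’s a toy sketch. It is NOT how GPT-3 works internally (GPT-3 is a huge neural network, this is just word-pair counting), and the corpus and function names are made up for illustration. But the objective is the same flavor: look at text, learn which word tends to come next.

```python
from collections import Counter, defaultdict

# Toy stand-in for "predict the next word". GPT-3 uses a neural network with
# 175B parameters; this just counts which word follows which (a bigram model).

def train_bigram(text):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Made-up training text, purely for illustration.
CORPUS = "the patient has a fever the patient has a cough the doctor has a plan"

if __name__ == "__main__":
    model = train_bigram(CORPUS)
    print(predict_next(model, "the"))
```

The jump from this to GPT-3 is (very roughly) swapping the counting table for a model that looks at far more context than one previous word.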

The big things with GPT-3 vs. GPT-2 and why it’s a big deal generally:

- The general architecture has not changed, only the number of parameters, so now the model performs really well across a bunch of different use cases. In theory this means that by starting from this same model, you can prime it for whatever use case you want by exposing it to your own data, which is something any company can do regardless of AI expertise.

- To that end, it’s quite easy to teach it new things with just a small amount of input data. Take the model, think about your use case, give a few examples of that use case, and voila it actually works quite well.

- It’s an API! A pre-trained model that’s this sophisticated is literally a few lines of code away from being used in a new application. That’s why we’ve seen this explosion of creativity around different use cases for it already. This is a glimpse into the true democratization of AI tooling, which means you need people who understand the use cases and how to prime the model, rather than people who can build the model itself.
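Here’s a rough sketch of what "give a few examples of that use case" can look like in practice. The `Input:`/`Output:` layout, the function names, and the `complete` callable are all my own assumptions for illustration; `complete` is a placeholder for whatever function actually sends text to the model and returns its continuation, not a real library call.

```python
from typing import Callable, List, Tuple

def build_few_shot_prompt(task: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Lay out a task description, a few worked examples, and the new input.

    The model is expected to continue the text after the final "Output:".
    The Input/Output format is an illustrative convention, not a requirement.
    """
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

def run_few_shot(complete: Callable[[str], str],
                 task: str,
                 examples: List[Tuple[str, str]],
                 query: str) -> str:
    """Build the prompt and hand it to `complete` (a hypothetical model call)."""
    return complete(build_few_shot_prompt(task, examples, query)).strip()

if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Explain the healthcare term in plain English.",
        [("EOB", "A statement from your insurer showing what it paid on a claim.")],
        "copay",
    )
    print(prompt)
```

The point is that the "training" here is just text: swap the examples and you’ve got a different tool, no model building required.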

If you were to look only at Twitter, you’d only see the cherry-picked demos that worked well. But even though the model can produce very human-like and sensible paragraphs, it’s not perfect. The gist I seem to get from reading blogs like those of Kevin Lacker, Max Woolf, and Gwern is that some of GPT-3’s key failures are around:

- It tries to force an answer that seems plausible even if it actually doesn’t know (but I mean I have this problem too so…)

- It is wrong a not insignificant part of the time (aren’t we all?)

- It has limited short-term memory and can’t string several points of logic together (wait I also have this problem)

- It’s bad at doing math and arithmetic (…uh oh)

Also, because certain corners of the internet are racist and sexist, the model seems to have picked up some of that too. Because that didn’t fall into my joke scheme above (thankfully), I’m making it a separate but important line.

GPT-3 x Healthcare

The uninspired healthcare futurists will likely look at this and think to themselves “chatbots are the future of healthcare, here is proof”. But to be honest, I don’t see this going anywhere diagnostic soon with the failure rate it seems to have.

Which then makes me wonder: what are the areas in healthcare that have low downside risk, are text heavy, take an unnecessarily long amount of time, and usually involve one or more prompts/questions that can be answered without being connected to each other? In general it seems like the model is much better at explanations than deductions.

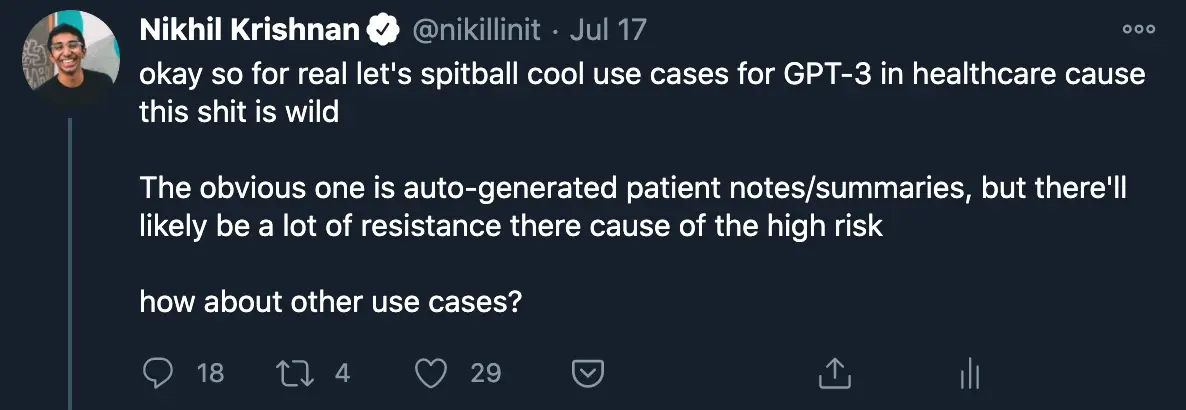

I asked this question on Twitter and got some good responses that I’m summarizing + adding in my own.

- A new WebMD - Let’s say a patient is diagnosed with something and wants to understand more about their disease or the research that’s currently out there. Google and WebMD will only get you so far, but taking a bunch of sources across the web and summarizing them in an easy to access place for patients would be clutch.

Or honestly just deciphering the healthcare jargon that each of us constantly has to sift through anytime we deal with a medical bill or prescription. Kind of like a modern Investopedia/Wikipedia specific to healthcare terms. I actually tested this with the learnbyanyone tool and honestly this is a better explanation of revenue cycle management than I’ve gotten from anyone who’s tried to explain it to me before. But I still don’t understand it lol.

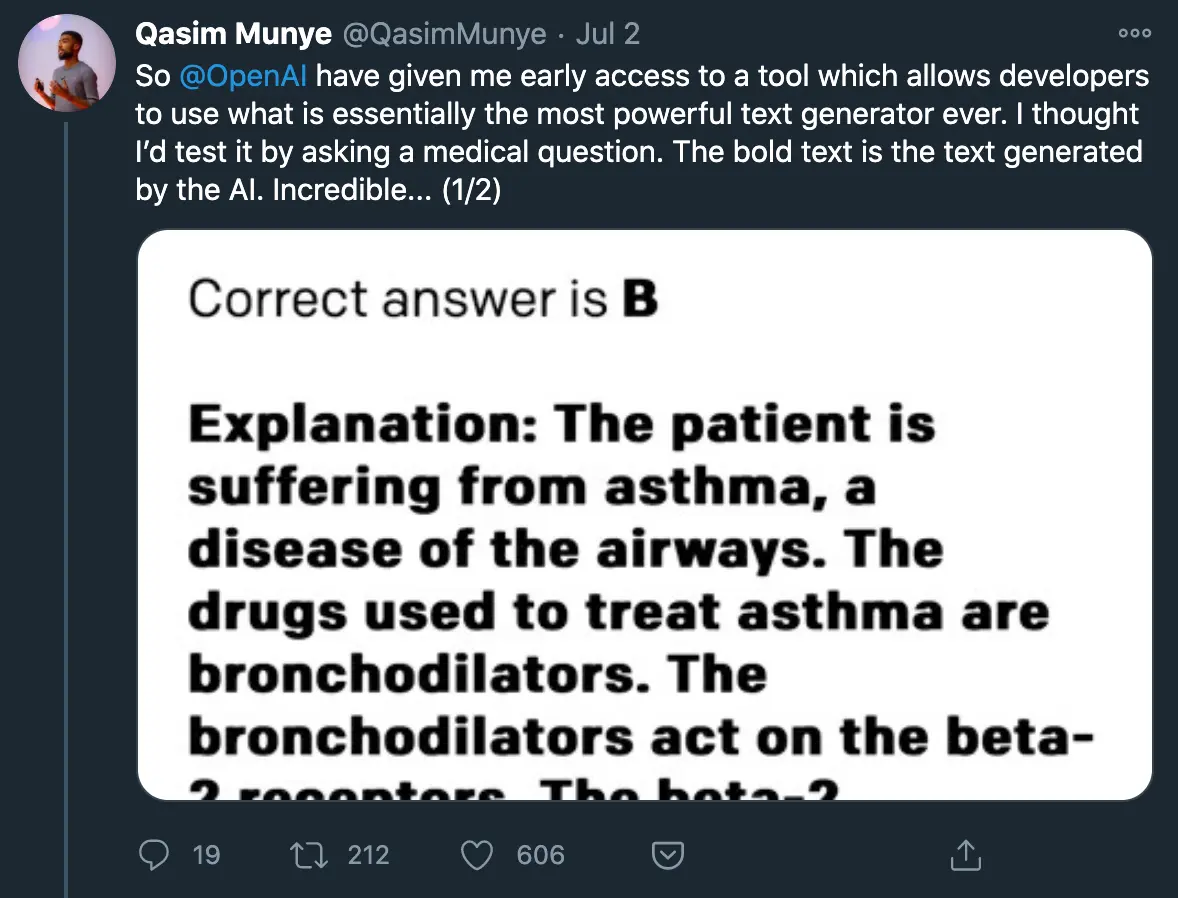

- Clinical Decision Support and a new UpToDate - Look, doctors don’t remember every single thing they learned in med school, shocker. Many of them go to UpToDate to refresh themselves and then figure out what to do next based on the specific patient inputs. Clinical decision support tools could give plain text explanations plus the usual evidence-based protocol for a given disease/issue, and the physician could feed in some of the specific inputs (demographics, history, etc.) to get a written output. Some of the GPT-3 demos show how it can spit out explanations for different levels of reading comprehension; maybe you can do the same for different levels of familiarity with the issue (e.g. specialist, non-specialist, etc.).

- Documentation - Physicians have to write a lot; it’s one of the reasons there’s so much physician burnout. In many cases physicians will copy and paste a previous note over and use other electronic shortcuts to save time, which will sometimes bring outdated information into the new note. GPT-3 could potentially make this process simpler by generating a new note from a smaller set of inputs, which a physician could then review and greenlight. That keeps the note relevant and avoids the errors that come with copying.

There are probably lots of places this could be applied, like discharge notes for example. Physicians already create “dot phrases”, which are basically commands to autofill text. These are really simplistic; imagine smarter auto-generated ones powered by GPT-3. That would be super powerful.

- Journal Writing - Considering most journal writing sounds like a robot wrote it anyway, this shouldn’t be too difficult. Honestly the only part that really needs to be written subjectively is the discussion section - past that it’s basically just descriptions of what happened or summaries of the findings. I bet you could use GPT-3 to output something that's like 80% of the way there.

Or honestly it would be great to turn journals/research papers generally into summaries that were understandable for regular patients (…and healthcare analysts). Sometimes it’s hard to figure out exactly what you’re looking at, and making science more accessible to non-scientists should be something we strive towards.

- Test Prep for Med Students - Honestly if there’s anything GPT-3 is good at, it’s creating relatively reasonable scenarios from a small amount of input data. Being able to generate questions or find explanations for answers would probably be a useful tool for students prepping for the MCAT, Step 1, Step 2, etc. This is also similar to the scenario I mentioned above, where the explanation given can be tailored to your level of expertise with the subject matter.

- No-code tools - One of the cooler applications to see is GPT-3 parsing out a sentence and spitting out functional web apps, balance sheets, SQL queries, etc. without any coding or accounting knowledge necessary.

I wonder if there is a way to combine GPT-3 with some of the existing robotic process automation (RPA) tools to make it easier for physicians, admins, etc. to build their own automated workflows. A separate topic for another day, but RPA essentially lets a computer mimic your keystrokes so you can automate parts of your job. But building those flows isn’t super simple, and they break or need updating all the time. In the UiPath interface, for example, you need to string together commands through a flowchart to do this. What if you could just type out what you wanted to do, and the flow would build itself?
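Circling back to the “dot phrases” from the Documentation bullet: here’s a minimal sketch of how simplistic that kind of autofill is, which is sort of the point. The phrase names and the text they expand to are made up, and real EHR dot phrases surely have more going on; this is just the bare lookup-and-replace idea that a GPT-3-powered version would be improving on.

```python
import re

# Hypothetical dot phrases: a short command typed in a note maps to canned text.
DOT_PHRASES = {
    "nlexam": "Neurological exam within normal limits.",
    "fu2w": "Follow up in clinic in 2 weeks.",
}

# A dot phrase is a "." followed by a lowercase letter and more word characters.
# The lookbehind skips dots that come right after a word character or another
# dot, so "98.6", "e.g." and sentence-ending periods are left alone.
_DOT_PHRASE = re.compile(r"(?<![\w.])\.([a-z]\w*)")

def expand_dot_phrases(note, phrases=DOT_PHRASES):
    """Replace known dot phrases with their canned text; leave unknown ones as-is."""
    def _swap(match):
        return phrases.get(match.group(1), match.group(0))
    return _DOT_PHRASE.sub(_swap, note)

if __name__ == "__main__":
    print(expand_dot_phrases("Temp 98.6 today. .nlexam .fu2w"))
```

Notice there’s no awareness of the patient at all: the same canned sentence goes in every time, which is exactly where a generated, context-aware draft (still reviewed by the physician) could help.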

Conclusion/Parting Thoughts

Once again, I’m speaking way out of my comfort zone. But it was hard not to get excited seeing all of these amazing prototypes get built for such a wide variety of use cases.

From talking to a few healthcare friends playing around with the tool, what’s impressive about it is how generalizable it is and how little you actually have to train it/prime it to do what you want. That alone makes it pretty cool.

I think what would make this really useful is:

- Showing the confidence with which GPT-3 is giving the output for each sentence. This would let whoever is reviewing really home in on the low-confidence areas.

- Being able to give the model new data and weight it more heavily toward those data sources while maintaining the same parameters. Currently the model is trained on some mix of pre-processed data from the web. Being able to add some pre-processed healthcare data, weight it, and re-train it through the same model would likely make it even more usable in healthcare contexts (though getting a large, usable, clean healthcare training dataset for something like this is probably stupid hard and retraining is probably very expensive).

- Understanding how this is going to be able to handle patient data. I assume right now this is off the table, but understanding how this might be possible in the future will be key to its usability in healthcare.

- Also…how much is this going to cost? It feels like using this is going to be really expensive, what would the economics of an actual business running on this look like?
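On the first wish above (per-sentence confidence), here’s one hedged sketch of how it might work. It assumes the model can hand back a log-probability for each token it generates, and it treats the average token probability of a sentence as a stand-in for "confidence". That’s a big assumption: a model can be very sure of its wording and still be wrong on the facts. All names, numbers, and the 0.5 threshold are made up for illustration.

```python
import math

def _finish(tokens, logprobs):
    """Join tokens into a sentence and compute its geometric-mean token probability."""
    sentence = "".join(tokens).strip()
    mean_prob = math.exp(sum(logprobs) / len(logprobs))
    return sentence, mean_prob

def sentence_confidences(tokens_with_logprobs):
    """Split (token, logprob) pairs into sentences and score each one."""
    results = []
    tokens, logprobs = [], []
    for token, logprob in tokens_with_logprobs:
        tokens.append(token)
        logprobs.append(logprob)
        # Naive sentence boundary: a token ending in ., ? or !
        if token.strip().endswith((".", "?", "!")):
            results.append(_finish(tokens, logprobs))
            tokens, logprobs = [], []
    if tokens:  # trailing text without closing punctuation
        results.append(_finish(tokens, logprobs))
    return results

def flag_for_review(scored_sentences, threshold=0.5):
    """Return the sentences whose score falls below the (arbitrary) threshold."""
    return [s for s, prob in scored_sentences if prob < threshold]

if __name__ == "__main__":
    # Made-up tokens and probabilities, purely for illustration.
    output = [("The", math.log(0.9)), (" sky", math.log(0.9)),
              (" is", math.log(0.9)), (" blue", math.log(0.9)), (".", math.log(0.9)),
              (" Billing", math.log(0.2)), (" is", math.log(0.2)),
              (" simple", math.log(0.2)), (".", math.log(0.2))]
    print(flag_for_review(sentence_confidences(output)))
```

A reviewer’s UI could then highlight just the flagged sentences instead of making someone reread the whole note.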

The fact that we now have an API to build on top of a generalizable model this powerful, one that can be primed with seemingly just a few examples, feels like it strikes a blow to the idea that access to large datasets or trained algorithms is defensible. In fact, it seems more and more likely that data/models become less defensible over time, while the new workflows they enable or the services bundled with them matter much more.

If you’re working on or thinking about other use cases for GPT-3, let me know! This feels important and I’m trying to learn more.

Thinkboi out,

Nikhil aka. “a meatsack of training data”

Twitter: @nikillinit

Thanks to Shashin Chokshi, Matthew Schwartz, and Henry Li for reading drafts of this and spitballing ideas

Healthcare 101 Starts soon!

Our crash course teaches the basics of US healthcare in a simple to understand and fun way. Understand who the different stakeholders are, how money flows, and trends shaping the industry. Each day we’ll tackle a few different parts of healthcare and walk through how they work with diagrams, case studies, and memes. Lightweight assignments and quizzes afterward will help solidify the material and prompt discussion in the student Slack group.

Interlude - Our 3 Events + LLMs in healthcare

We have 3 events this fall.

Data Camp sponsorships are already sold out! We have room for a handful of sponsors for our B2B Hackathon and for our OPS Conference, both of which already have a full house of attendees.

If you want to connect with a packed, engaged healthcare audience, email sales@outofpocket.health for more details.