Machine Vision, Robots, and Endoscopes with Matt Schwartz

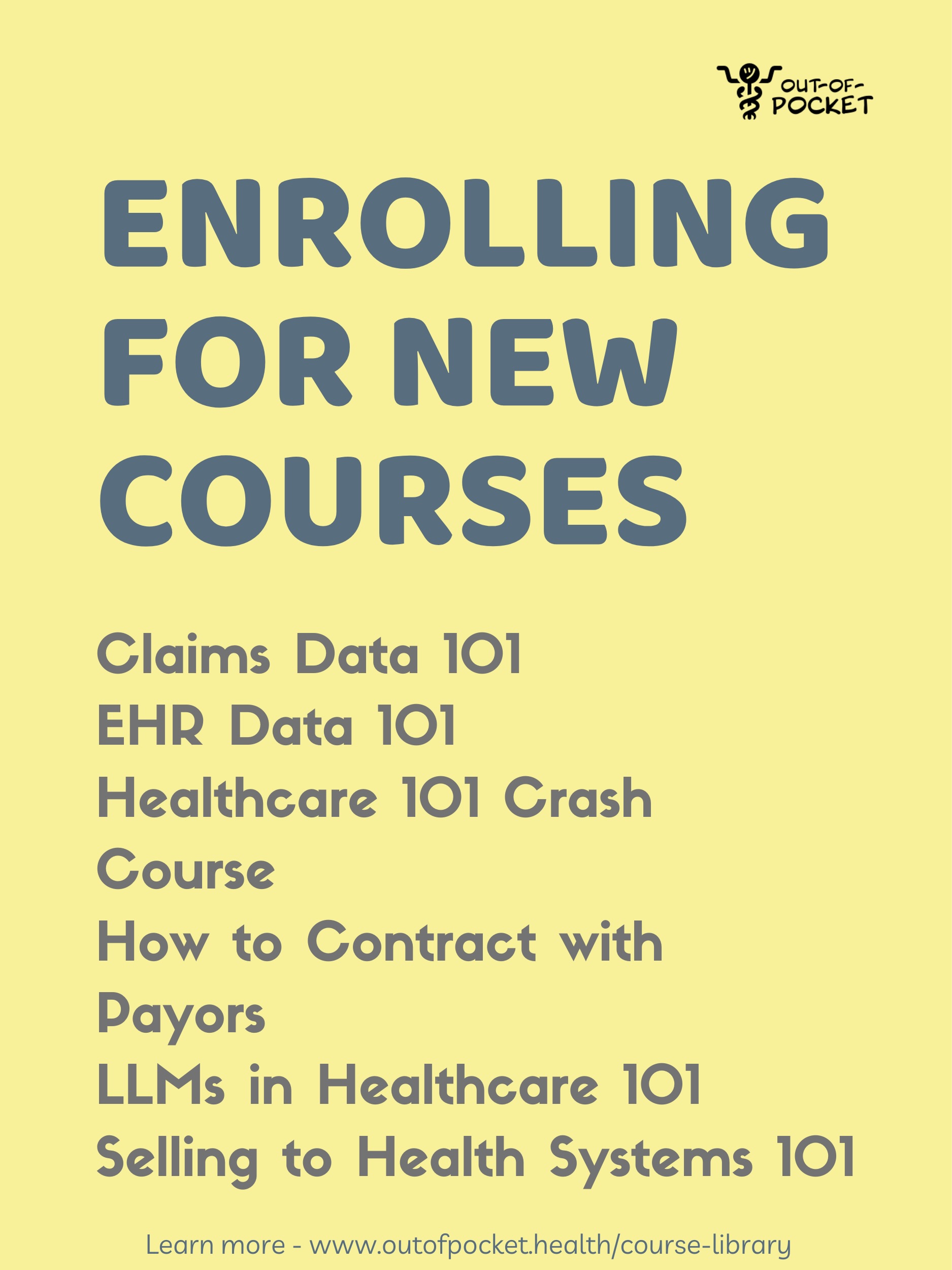

Get Out-Of-Pocket in your email

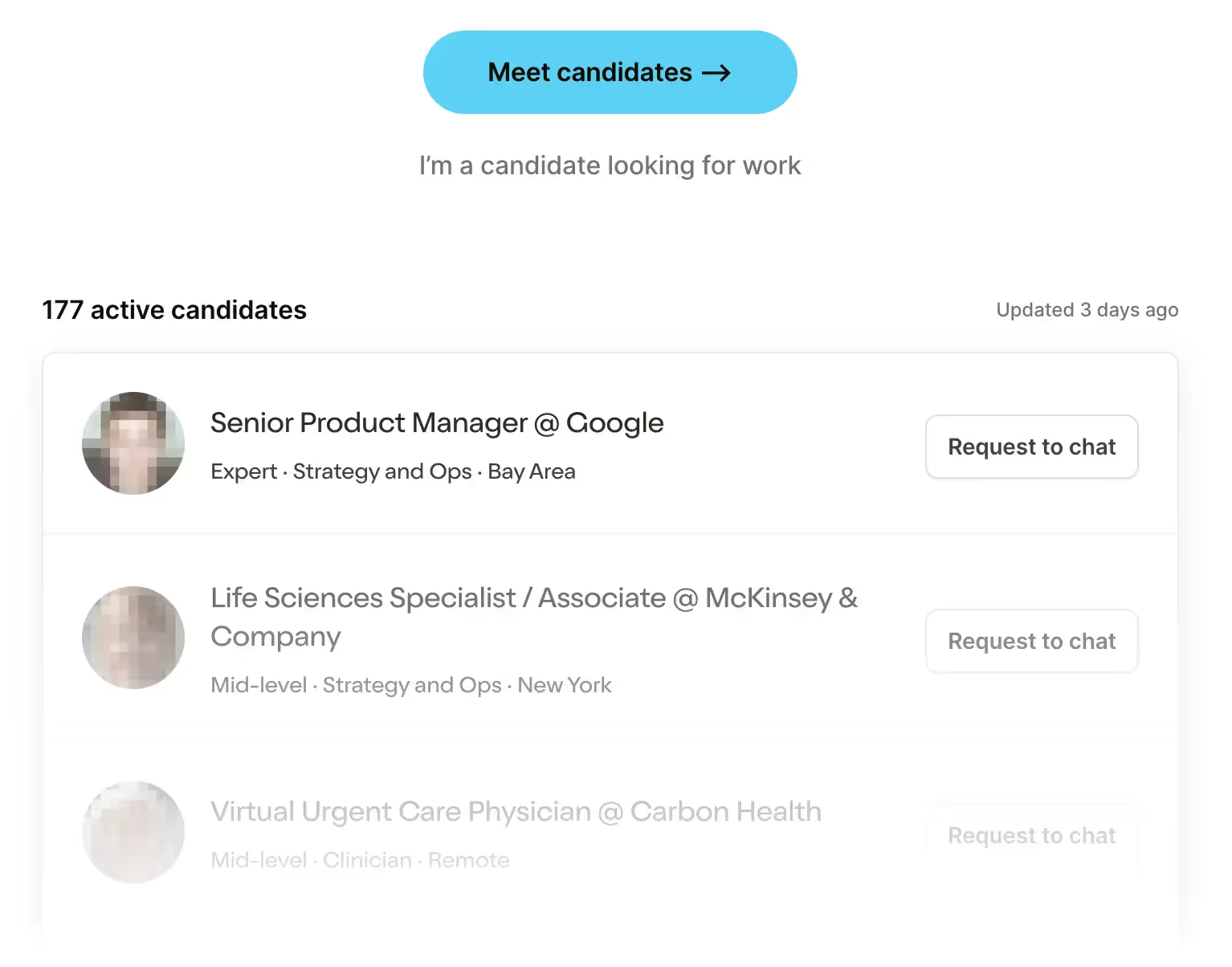

Looking to hire the best talent in healthcare? Check out the OOP Talent Collective - where vetted candidates are looking for their next gig. Learn more here or check it out yourself.

Hire from the Out-Of-Pocket talent collective

Hire from the Out-Of-Pocket talent collectiveHealthcare 101 Crash Course

%2520(1).gif)

Featured Jobs

Finance Associate - Spark Advisors

- Spark Advisors helps seniors enroll in Medicare and understand their benefits by monitoring coverage, figuring out the right benefits, and deal with insurance issues. They're hiring a finance associate.

- firsthand is building technology and services to dramatically change the lives of those with serious mental illness who have fallen through the gaps in the safety net. They are hiring a data engineer to build first of its kind infrastructure to empower their peer-led care team.

- J2 Health brings together best in class data and purpose built software to enable healthcare organizations to optimize provider network performance. They're hiring a data scientist.

Looking for a job in health tech? Check out the other awesome healthcare jobs on the job board + give your preferences to get alerted to new postings.

Today I’m interviewing Matt Schwartz, the co-founder and CEO of Virgo. Despite my being a Cancer, we had a good interview.

We discuss:

- Some of the tricky challenges with building surgical robots

- Using AI to change clinical workflows in gastroenterology

- Advances in the machine vision space and what they’re enabling

- How to think about going software only vs. software + hardware

- Data annotation for machine learning and model decay

1) What's your background, current role, and the latest cool healthcare project you worked on?

I did my undergrad at Vanderbilt University. I went there planning to study economics, but after freshman year I interned at a minimally invasive spine surgery device company. I fell in love with medical technology that summer, changed my major to biomedical engineering, and that set me on a course for a career in healthcare.

After graduating, I worked for 5 years as a product manager at that same spine device company — called NuVasive. I worked on the company's flagship procedure XLIF, which stands for eXtreme Lateral Interbody Fusion. Basically instead of doing spine surgery directly from the back or through the stomach, NuVasive pioneered a minimally invasive approach to the spine through the patient's side.

In my time at NuVasive I helped develop and launch our next generation access system along with some new implants and disposable components. It was really an amazing entry point to working in healthcare that combined direct clinical exposure, business strategy, sales, marketing, and working directly with our engineering teams.

After NuVasive, I spent about a year and a half as a product manager at Intuitive Surgical working on the da Vinci robotic surgery system. At Intuitive I helped support what was the latest system at the time (the da Vinci Xi), launched a wirelessly connected operating table, and spent some time working on next generation robotic platforms. Intuitive's technology feels like it's from the future; I spent a lot of late nights in the office just practicing robotic suturing and running through surgical steps on the simulator. I highly recommend checking out this video, and if you ever see da Vinci being demoed at a hospital or trade show, make sure you sit down for a test drive.

I left Intuitive in October 2016 to start Virgo, where I'm co-founder and CEO. Virgo's mission is to improve patient outcomes and clinical workflows in healthcare by developing automation and AI tools for endoscopy. We've started by focusing specifically on gastrointestinal (GI) endoscopy — procedures such as colonoscopy and upper endoscopy.

One of the central challenges in developing AI for these types of procedures has been the lack of viable training data. Even though these procedures are video-based, historically the procedure videos have not been saved as part of the medical record. We recognized that not only was this missing video data critical for AI development, but it was also a major gap in the clinical documentation that could help solve a number of other problems. So, in 2018 we launched the first cloud video capture, management, and AI analysis platform for GI endoscopy.

2) At Intuitive, you got to work with some of the da Vinci robots. What were some of the cool challenges you faced when building a robotically integrated operating table?

For starters, I think operating tables are underappreciated as medical technology. A huge part of successful surgery is getting the right patient positioning, and having the right operating table plays a very important role.

Integrated Table Motion at Intuitive took operating tables to a new level. One of the key value propositions of the da Vinci Xi was its ability to gain nearly complete access to all four quadrants of a patient's abdomen, whereas previous generations could only access one or two during a procedure. However, to truly gain good exposure to the entire abdomen, patients would need to be repositioned from Trendelenburg position (head down) to reverse Trendelenburg (head up). With a static operating table, this would require completely undocking the robot from the patient, adjusting the table, then redocking the robot, a process that could easily take five minutes or longer and really interrupt the flow of a procedure.

Integrated Table Motion wirelessly connects the da Vinci robot to the operating table, allowing the two systems to move synchronously. This animation gives a great overview of the technology, and here's the technology in action (warning: surgical footage). You can see in the second video that when the patient is shifted from head up to head down, the small bowel gently shifts out of the way to expose the colon.

I'm sure there were countless cool challenges that the super smart engineers at Intuitive had to solve to get table motion working properly. However, my favorite challenge was thinking through the clinical applications for table motion. All of a sudden, we had new expanded capabilities for this already powerful surgical tool. It was a really thought-provoking exercise to think through where these capabilities could add the most value clinically.

Eventually, we set our focus on improving the clinical workflow in colectomy procedures (removal of all or part of the large intestine, generally for cancer or inflammatory bowel disease). These procedures demanded multi-quadrant access throughout the patient's abdomen, which was enabled by Integrated Table Motion. From there, I got to work together with our clinical engineers and expert robotic surgeons to help define the optimal steps to set up the robot for these procedures.

3) So at Virgo, your software helps gastroenterologists when they're doing endoscopies. You mention that this is largely a workflow problem - can you walk me through what Virgo's workflow looks like and how you came up with that flow?

We are very focused on clinical workflow at Virgo. Surgical and endoscopy suites are well-oiled machines, and when I go observe procedures, the environment evokes images of a beehive. Everyone is constantly moving, doing one or multiple jobs in this choreographed dance that the entire staff seems to just somehow know. When you spend time in this environment, you realize that even slight deviations from the expected clinical workflow can potentially throw off the entire routine and ultimately even affect patient care.

We didn't invent video recording for endoscopy at Virgo. Doctors have been able to record videos of their procedures since at least the days of VCRs. However, prior to Virgo, capturing endoscopy video required a significant deviation from the normal clinical workflow. Even modern video capture systems require doctors or other staff members to set up DVDs or external hard drives, adjust the video format, and, crucially, remember to actually start and stop video recording. Because of that extra burden, it rarely if ever actually happens. And even in the cases where it does happen, doctors are left with DVDs and hard drives full of videos that are nearly impossible to make use of.

Virgo gives doctors all the benefits of having a properly archived library of procedure videos without imposing any changes on their normal procedural workflow. We accomplish this through a combination of hardware and software. The Virgo platform starts with a small device — similar in form factor to an AppleTV — that connects to the video output of any existing endoscopy system. This device runs patented machine learning algorithms that automatically detect when to start and stop the video recording. Videos are then encrypted and securely transferred to cloud storage where they are further processed for highlight analysis. Doctors access their video library using Virgo's web portal.

[Product demo here, but warning it’s got icky surgical stuff]

The magic is that all of this happens without the doctor changing anything about their workflow. The end result is that instead of 1 in 1,000 procedures getting recorded, we help doctors record every single one of their procedures. Not only does this automation help doctors, it has also helped us capture over 90,000 procedure videos to date.
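Virgo's patented algorithms aren't described here, but the general idea of turning per-frame model outputs into start/stop decisions can be sketched. This is a minimal illustration that assumes a hypothetical per-frame classifier emitting a score between 0 and 1 for "the scope is inside the patient"; the thresholds and frame counts are made-up values. Requiring several consecutive frames before flipping state (hysteresis) keeps a single noisy frame from starting or stopping a recording.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class RecordingController:
    """Debounced start/stop logic driven by per-frame classifier scores.

    Hypothetical sketch: all thresholds and frame counts are illustrative,
    not Virgo's real settings.
    """
    start_threshold: float = 0.8  # score that counts toward starting
    stop_threshold: float = 0.2   # score that counts toward stopping
    start_frames: int = 3         # consecutive frames needed to start
    stop_frames: int = 5          # consecutive frames needed to stop
    recording: bool = False
    _streak: int = 0

    def update(self, score: float) -> bool:
        """Consume one frame's score and return the recording state after it."""
        if not self.recording:
            self._streak = self._streak + 1 if score >= self.start_threshold else 0
            if self._streak >= self.start_frames:
                self.recording, self._streak = True, 0
        else:
            self._streak = self._streak + 1 if score <= self.stop_threshold else 0
            if self._streak >= self.stop_frames:
                self.recording, self._streak = False, 0
        return self.recording


def segments(scores: Iterable[float], controller: RecordingController) -> List[Tuple[int, int]]:
    """Return (first_frame, end_frame_exclusive) spans where recording was on."""
    spans: List[Tuple[int, int]] = []
    start = None
    i = -1
    for i, score in enumerate(scores):
        on = controller.update(score)
        if on and start is None:
            start = i
        elif not on and start is not None:
            spans.append((start, i))
            start = None
    if start is not None:  # still recording when the stream ended
        spans.append((start, i + 1))
    return spans


# Noise before the procedure, ten confident "inside" frames, then withdrawal.
scores = [0.1] * 3 + [0.9] * 10 + [0.05] * 6 + [0.1] * 2
print(segments(scores, RecordingController()))  # [(5, 17)]
```

Note the built-in lag: recording only flips on at the third confident frame. A real device would presumably keep a short rolling buffer so those first frames aren't lost.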

4) We talked previously about imaging data and why it's now such an interesting time to build in the machine vision space. Why is imaging such a hot area right now for healthcare companies and what are the main challenges that still exist with dealing with image/video data?

I think the major driving force here is all of the advancement happening in the broader machine vision space. Image and video data is particularly well-suited for the new machine learning techniques you frequently hear about — deep learning, convolutional neural networks, recurrent neural networks, etc. Research teams in academia and in industry (Google, Facebook, Microsoft, etc.) are rapidly improving the state-of-the-art in tasks like image classification and object detection. For example, if you look at the gains made on state-of-the-art ImageNet image classification performance, you see that in a little under 10 years we've gone from Top-1/Top-5 accuracy of 50.9%/73.8% to 88.5%/98.7%.
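For readers who haven't run into the Top-1/Top-5 terminology: a model outputs a score for every class (ImageNet has 1,000), and an example counts as correct under Top-k if the true label is among the k highest-scoring classes. A minimal sketch in plain Python, using made-up scores for a three-class toy problem:

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of examples whose true label is among the k highest-scoring classes."""
    if not labels or len(scores) != len(labels):
        raise ValueError("need one score row per label, and at least one example")
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score, truncated to k.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        hits += label in top_k
    return hits / len(labels)


scores = [
    [0.1, 0.7, 0.2],
    [0.5, 0.3, 0.2],
    [0.2, 0.3, 0.5],
    [0.6, 0.3, 0.1],
]
labels = [1, 1, 0, 2]
print(top_k_accuracy(scores, labels, 1))  # 0.25
print(top_k_accuracy(scores, labels, 2))  # 0.5
```

Top-5 can never be lower than Top-1, since the single best guess is always among the top five, which is why each Top-5 figure above sits well above its Top-1 partner.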

Fortunately, these advances are largely being open sourced, meaning the underlying model architecture and algorithms used to train the models are available for anyone to develop on top of. The only ingredients you need to bring to the table to use these advances are domain expertise and data. This is particularly exciting in healthcare because it's an industry that is full of domain expertise and theoretically full of data.

I emphasize theoretically because not all data is created equal. When it comes to machine learning there are some qualities of data that make it more or less useful. For example, more data is generally better, and more diverse data generally helps ML models generalize to new circumstances. Data that is well-annotated is more useful for supervised machine learning techniques, though there are unsupervised or semi-supervised ML techniques that can take advantage of data that lacks annotations.

More holistically, capturing data intentionally with the understanding that it may be used for ML development often leads to much higher-quality data and some assurance that the right data is actually being captured for the problem at hand. This is where a lot of healthcare data falls short: much of it is captured haphazardly and without a specific machine learning goal in mind. There is definitely some interesting imaging data (less so video data) available in EHR systems, but it's unreasonable to expect that pre-existing data will definitely be there to help you solve a specific problem.

And I think this is actually where the biggest opportunities exist. If you build something that solves an immediate problem AND intentionally generates data flows for ML development, you will set yourself up for success. There's no shortage of imaging, video, and even audio data in healthcare that either isn't being captured at all or is being captured haphazardly. If you can build a continual data capture flow in this space it will likely lead to a sustainable long-term advantage.

Another key challenge is that, particularly in healthcare, a viable machine learning model definitely does not guarantee a viable product. As you well know, healthcare incentives are weird and often counterintuitive. Sadly, I think a lot of ML innovation that would ultimately help improve patient outcomes will fail to reach widespread adoption because it wasn't built to be a viable product in our healthcare ecosystem. I think this is another reason to concentrate efforts on first building a viable product that intentionally generates data for ML development, rather than building the ML first.

5) You made the conscious decision to be hardware agnostic instead of a hardware+software bundle. What are the pros and cons between the two routes, and why'd you choose to go with yours?

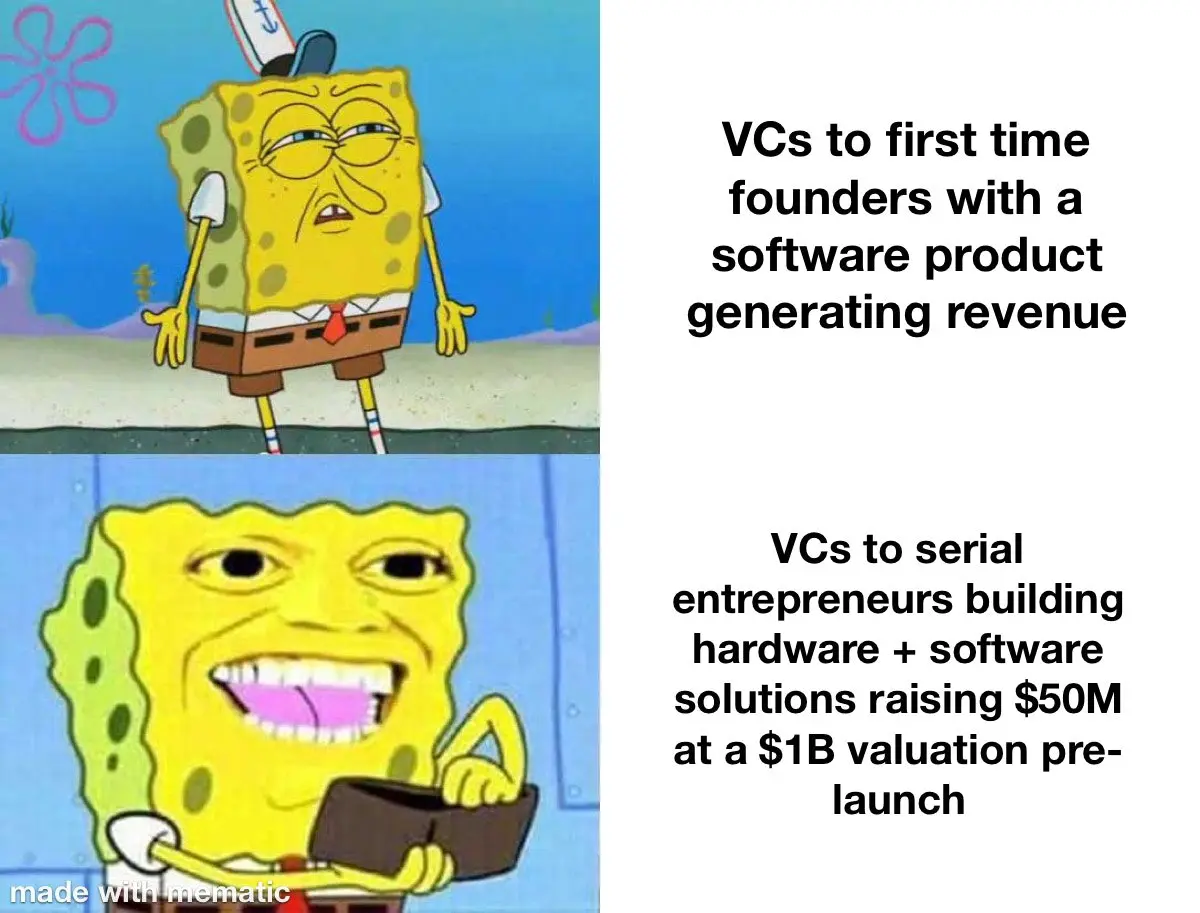

I definitely admire companies that have built fully-integrated hardware+software solutions. When it works there are really strong data flywheel effects.

However, I don't think building dedicated hardware is right for every situation. In my mind, the decision making process should include the following factors:

- Market dynamics

- Difficulty of underlying technology

- Benefits of tech-enabled hardware

- Access to capital

Market dynamics

By this I mean it's important to understand the landscape of the hardware systems you're seeking to displace. Is the market dominated by a single large player or fragmented? What's the typical sales cycle for your category and how frequently do providers buy new systems? What's the current price point, and where do you think you could come in?

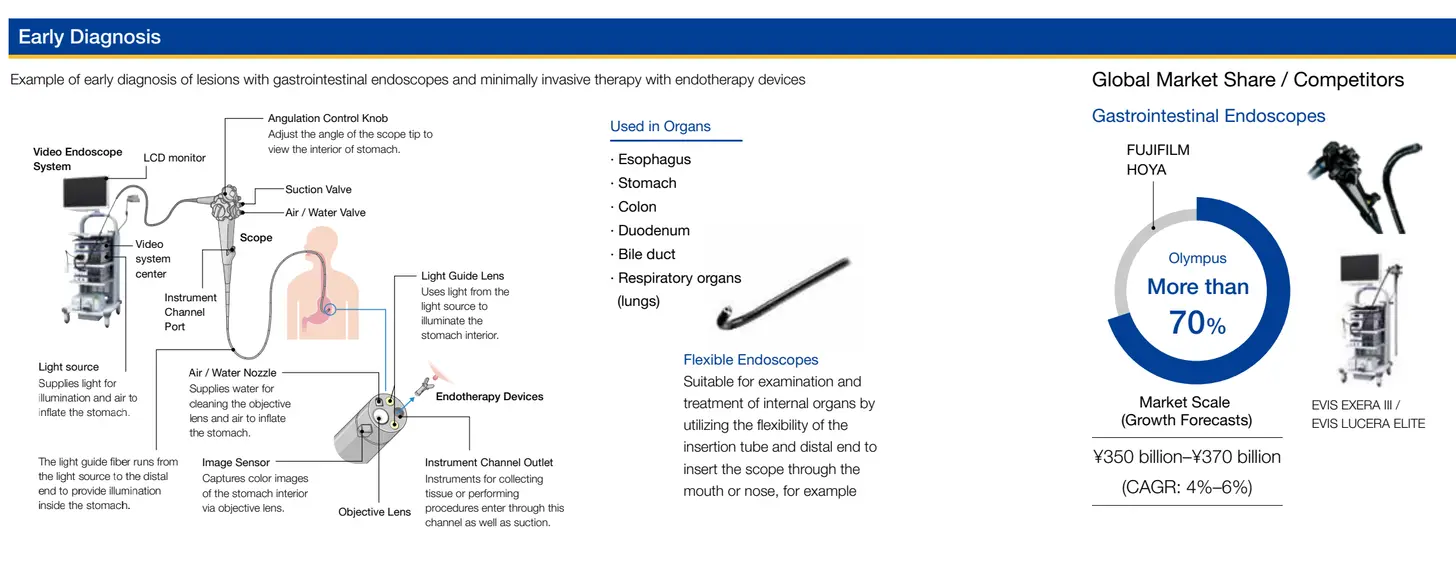

For Virgo, we looked at the GI endoscopy marketplace and saw a US market dominated by a single player — Olympus. The sales cycle for endoscopy equipment can be a multi-year endeavor, with hospitals buying new equipment every 5+ years and endoscopy centers often holding onto their equipment even longer. A new endoscopy video processing tower is priced in the low hundreds of thousands of dollars, and the actual endoscopes are priced in the high tens of thousands of dollars.

One major goal with Virgo was to capture an ever increasing share of endoscopy video data. We judged these market dynamics to be fairly hostile to a new endoscopy upstart capturing significant market share in a reasonable period of time. In fact, several companies have attempted to displace Olympus with new scope technology, and none have succeeded.

Difficulty of underlying technology

In general, hardware systems with "easier" underlying tech are more amenable to a build-your-own strategy. As the tech gets harder, there is simply more risk in getting the product to market. You might be able to build the best software layer for an endoscopy system, but if you can't build a proper endoscope, your system won't stand a chance.

On this dimension, we also judged endoscopy to be a very challenging space. There is actually an incredible amount of engineering know-how that goes into an endoscope. A flexible endoscope involves steerable components, fiber optics, imaging sensors, and working channels for irrigation, suction, and instrumentation. All of this must fit into a tube that's about 10mm in diameter. To make matters worse, modern endoscopes are reusable, meaning they must be able to tolerate extreme temperature, pressure, and chemical exposure associated with sterilization processes.

Benefits of tech-enabled hardware

This is somewhat obvious, but another important factor is whether your tech-enabled hardware will actually offer enough benefit to drive adoption. Again in endoscopy, there have been technically enhanced solutions that failed to gain significant market traction.

Here Butterfly Network is an instructive comparison. Butterfly is an amazing company that I very much admire. They've developed a handheld ultrasound system that is compatible with mobile devices and has a serious AI layer to aid in the actual scanning and diagnostic process. I think it's clear that they have executed the build-your-own strategy very effectively and are now being rewarded for it.

One of the key advantages for Butterfly is that their tech enabled solution is actually opening up entire new market segments for point-of-care ultrasound. Previously, POC ultrasound systems would typically be wheeled from room to room in a hospital when needed. Each hospital would have a limited number of these systems and prioritize their utilization.

Butterfly greatly expanded the market for POC ultrasound by making it handheld and adding in that AI layer. Now, nearly any doctor who may benefit from having a POC ultrasound in their day-to-day can simply carry one around. And the AI makes it easier for them to use it directly rather than require a sonographer. The whole universe for how POC ultrasound is utilized is now growing, and medical students are receiving Butterfly ultrasound systems in the same way they used to receive a stethoscope.

With Virgo, we didn't see a great analogy to endoscopy. Procedures like colonoscopy, endoscopic retrograde cholangiopancreatography (ERCP), and endoscopic ultrasound (EUS) are more "serious" procedures than POC ultrasound. They take place in dedicated endoscopy suites, and the majority of them are performed under general anesthesia. We didn't see a likely future where an AI-enabled endoscope would open up an entire new market segment for GI endoscopy, at least in the relatively near-term.

Access to capital

Ultimately, the above factors will give an entrepreneur some sense of the difficulty and time scale required to build their own hardware system, iterate to satisfaction, gain regulatory clearance/approval, and actually penetrate the market. This should provide an idea of the capital resources necessary to do all of this successfully.

With that number in mind, they can determine whether they will have access to sufficient capital. If you look at Butterfly Network's fundraising history, their first round was $100M in 2014. Butterfly was founded by Jonathan Rothberg. Take a look at Jonathan's Wikipedia page, and you'll see that he has an incredibly impressive background. It's not surprising that he was able to come out of the gates raising $100M to build an ultrasound company from the ground up.

I think my co-founders and I are pretty cool, but we are not quite Jonathan Rothberg level cool…yet, at least! We're all first-time founders, and it wasn't likely that we would be able to raise tens of millions of dollars for Virgo right out of the gate. Hopefully this will be on the table for our next go-around!

Summary

With all of that in mind, we chose not to build our own endoscopy systems for Virgo and instead built a system that would be compatible with any existing endoscopy equipment. We felt this would give us the best chance to fulfill our vision for the company within our capital fundraising constraints.

At the same time, we didn't abandon hardware altogether. Our capture device gives us some of the advantages of building the full endoscopy system with much less complexity. There is definitely something to be said for having a hardware footprint in a procedure room and the future opportunities that affords. We also have much more control over our data ingestion.

I think this decision is best viewed as a spectrum rather than a binary. On one end of the spectrum you have Butterfly Network building ground up ultrasound devices, on the other you have a swath of pure software digital health companies, and Virgo sits somewhere in the middle.

6) You talked a bit about having data that's "well-annotated", and it's a term that comes up quite a bit in reference to training data. What does annotation actually look like, and what makes it "good"?

The concept of "well-annotated" data is both interesting and evolving. Many machine learning techniques for image/video data involve supervised learning. This means you train the ML model using data that has been explicitly labeled by a human (or humans) — for example, a set of images with classification labels. From this labeled data, a supervised learning model can be trained to predict output labels for new input data.

I believe in order for annotations to be "good" the most important thing is that they are actually relevant to the task at hand. If you have a dataset of pathology slides that are annotated with cancer type, those annotations won't necessarily help you train a model to predict cancer stage. This is a frequent stumbling block in healthcare where there is a desire to utilize datasets retrospectively.

Supervised learning can be very useful, but the challenge of course is acquiring accurate annotations. Particularly with large datasets (looking at you, video) the process of adding annotations to raw input data can be extremely time-consuming and costly. At the ground level, this involves using specialized tools to manually review images/videos and add labels. This involves significant human capital and potentially paying high-cost experts if expert-level annotations are desired.

This is the typical state of affairs with ML development; however, there are early signs that this sort of pixel-perfect annotation is becoming less critical for solving lots of interesting problems. Semi-supervised and unsupervised machine learning techniques enable ML engineers to creatively stretch their data further.

We employ a form of semi-supervised learning that begins with a subset of well-annotated frames from our video dataset for a particular task. Using this data subset, we will train a classification model. Then we use this model to predict annotations for a larger subset of our total data. From there, a human sorts through the predictions to either validate or correct them. This process has translated to significant time-savings in the annotation process for us.
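Here's a rough sketch of that model-assisted annotation loop. It isn't Virgo's pipeline: a toy nearest-centroid classifier on precomputed feature vectors stands in for a real image model, the class names are hypothetical, and `reviewer` is a placeholder for the human step that either accepts or corrects each proposed label.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]
Example = Tuple[Vector, str]


def fit_centroids(examples: List[Example]) -> Dict[str, List[float]]:
    """Toy nearest-centroid 'model': the mean feature vector of each class."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, y in examples:
        acc = sums.setdefault(y, [0.0] * len(x))
        for j, v in enumerate(x):
            acc[j] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict(centroids: Dict[str, List[float]], x: Vector) -> str:
    """Label of the closest centroid (squared Euclidean distance)."""
    return min(centroids, key=lambda y: sum((a - b) ** 2 for a, b in zip(x, centroids[y])))


def assisted_annotation(
    labeled: List[Example],
    unlabeled: List[Vector],
    review: Callable[[Vector, str], str],
) -> Tuple[List[Example], int]:
    """Train on a small labeled set, pre-label the pool, let a human confirm or fix.

    Returns the enlarged labeled set and how many predictions were corrected.
    """
    centroids = fit_centroids(labeled)
    dataset = list(labeled)
    corrections = 0
    for x in unlabeled:
        proposed = predict(centroids, x)
        final = review(x, proposed)  # human validates or corrects
        corrections += final != proposed
        dataset.append((x, final))
    return dataset, corrections


labeled = [([0.0, 0.0], "normal"), ([0.1, 0.0], "normal"),
           ([1.0, 1.0], "polyp"), ([0.9, 1.0], "polyp")]
pool = [[0.05, 0.1], [0.95, 0.9], [0.6, 0.5]]


def reviewer(x: Vector, proposed: str) -> str:
    # Stand-in for the human: pretend the ambiguous frame is actually normal.
    return "normal" if list(x) == [0.6, 0.5] else proposed


dataset, fixed = assisted_annotation(labeled, pool, reviewer)
print(len(dataset), fixed)  # 7 1
```

The time savings come from the reviewer mostly accepting proposals rather than labeling from scratch; a common refinement is to send the least confident predictions to review first.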

There are even more promising semi-supervised learning techniques that border on fully unsupervised learning. In November 2019, the Google Brain team and Carnegie Mellon released a paper titled "Self-training with Noisy Student improves ImageNet classification". In this paper, the teams utilize annotated image data from ImageNet in combination with a massive corpus of unlabeled images. Their process involves "teacher" and "student" ML models which are trained to predict labels, then iteratively retrained on the combination of real labels and predicted "pseudo-labels".

This produced state-of-the-art results on top-1 ImageNet accuracy, besting the prior SOTA by 2%. More impressively, this model also dramatically improved robustness on harder datasets. And this was done primarily with unlabeled data.
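The core teacher/student loop is simple enough to sketch. What follows is plain self-training on a toy 1-D problem, not the paper's method: Noisy Student also uses an equal-or-larger student and injects noise (RandAugment data augmentation, dropout, stochastic depth) while training it, all of which this sketch omits. The confidence cutoff and the data points are illustrative assumptions.

```python
from statistics import mean
from typing import List, Tuple

Point = Tuple[float, int]  # (feature value, class label 0 or 1)


def train(points: List[Point]) -> float:
    """Toy 1-D classifier: a threshold halfway between the two class means.

    Assumes class 1 sits at larger feature values than class 0.
    """
    m0 = mean(x for x, y in points if y == 0)
    m1 = mean(x for x, y in points if y == 1)
    return (m0 + m1) / 2


def predict(threshold: float, x: float) -> Tuple[int, float]:
    """Return (label, confidence); confidence is the distance from the threshold."""
    return int(x >= threshold), abs(x - threshold)


def self_train(labeled: List[Point], unlabeled: List[float],
               rounds: int = 3, min_conf: float = 0.5) -> float:
    teacher = train(labeled)
    for _ in range(rounds):
        # Teacher assigns pseudo-labels, keeping only confident ones.
        pseudo: List[Point] = []
        for x in unlabeled:
            label, conf = predict(teacher, x)
            if conf >= min_conf:
                pseudo.append((x, label))
        # Student trains on real labels plus pseudo-labels, then becomes the next teacher.
        teacher = train(labeled + pseudo)
    return teacher


labeled = [(0.0, 0), (4.0, 1)]
unlabeled = [-1.0, 0.5, 1.0, 5.0, 6.0, 7.0]
print(train(labeled))                  # 2.0
print(self_train(labeled, unlabeled))  # 2.8125
```

The two hand labels alone put the boundary at 2.0; the pseudo-labeled points move it to 2.8125, reflecting where the unlabeled data actually sits. The same mechanism is the risk: a confident but wrong pseudo-label gets reinforced every round.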

I believe these semi-supervised learning techniques will continue to see wider adoption going forward. With this in mind, healthcare companies should focus on building systems that produce continuous and controlled data streams. If there are low-cost ways to naturally generate annotations for this data, that's great. If not, the goal should still be to have controlled data that is likely to support your problem solving efforts.

The other focal point for healthcare companies should be building systems that enable rapid testing, iteration, and eventually deployment of new models. Even if new models can't be deployed to production without proper regulatory testing, the ability to estimate how new models would perform in production will be extremely valuable. Machine learning as an engineering tool is an iterative process. Working with static datasets can produce interesting research results, but I believe building continuous data input/output systems will be the best long-term product strategy.
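One common way to estimate production performance before a model is allowed to act is shadow mode: the candidate sees the same live inputs as the production model, but only the production output is used. A minimal sketch, assuming models are plain callables and that ground truth arrives for only some inputs (`None` otherwise); the two threshold "models" in the example are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, Optional, Tuple


@dataclass
class ShadowReport:
    seen: int = 0
    agreed: int = 0
    labeled: int = 0
    production_correct: int = 0
    candidate_correct: int = 0

    @property
    def agreement(self) -> float:
        """Share of inputs where the candidate matched production."""
        return self.agreed / self.seen if self.seen else 0.0


def shadow_eval(
    stream: Iterable[Tuple[Any, Optional[Any]]],
    production: Callable[[Any], Any],
    candidate: Callable[[Any], Any],
) -> ShadowReport:
    """Score a candidate on live inputs without letting it affect the product."""
    report = ShadowReport()
    for x, label in stream:
        used = production(x)    # what the product actually acts on
        shadow = candidate(x)   # logged for comparison only
        report.seen += 1
        report.agreed += used == shadow
        if label is not None:   # ground truth is often delayed or missing
            report.labeled += 1
            report.production_correct += used == label
            report.candidate_correct += shadow == label
    return report


stream = [(0.1, False), (0.45, True), (0.9, True), (0.3, None)]
report = shadow_eval(stream, lambda x: x > 0.5, lambda x: x > 0.4)
print(report.agreement, report.production_correct, report.candidate_correct)  # 0.75 2 3
```

Agreement needs no labels at all, so it can be tracked on every input; accuracy comparisons only accumulate as ground truth trickles in.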

7) Tech like this theoretically supports different business models. Payers might be interested in catching certain cancers earlier, providers might want it for increased polyp-removal procedures, etc. How'd you decide on the pharma route and the clinical trial use case?

This is definitely true. The endoscopy video data we are capturing in Virgo is quite unique and can be utilized to solve a number of different problems for different stakeholders.

We are currently focused on using the Virgo platform to help pharma companies — specifically in the inflammatory bowel disease (IBD) space. For some background, IBD is an umbrella term for ulcerative colitis and Crohn's disease. These are debilitating, chronic, inflammatory diseases of the GI tract.

There are an estimated 3 million US adults living with IBD, and 70,000 new cases are diagnosed each year. IBD treatment generally involves dietary changes and pharmaceuticals; however, eventually surgery may be required.

IBD is a key segment for pharma companies, with the total market size expected to reach $21.3 billion by 2026. Unfortunately, current pharmaceutical outcomes are underwhelming. More than a third of IBD patients fail induction therapy, and up to 60% of patients who do respond to induction therapy eventually lose that response. As such, pharma companies are investing heavily to develop new and improved treatment options.

A few more harrowing statistics, then we'll get to how Virgo helps. IBD clinical trials are notoriously difficult to enroll. Each trial site enrolls an average of just 1 patient per year — the resulting trial delays cost pharma companies as much as $8 million per day.

We decided to focus on this problem because there is clearly a tremendous amount of room for improvement, and the Virgo platform is uniquely positioned to assist. A critical component of IBD care involves endoscopic surveillance. Accordingly, Virgo is able to help pharma companies improve IBD care in three ways:

- Clinical Trial Recruitment - We deploy the Virgo platform across the spectrum of endoscopy settings ranging from major academic hospitals to small endoscopy centers and automatically identify patients who are likely trial candidates based on a combination of endoscopic findings and medical history.

- AI Development and Deployment - Virgo provides pharma companies with tooling to annotate their own endoscopy data and train their own AI models. With those models in hand, we give pharma a ready-made platform to actually deploy their models in a clinical setting.

- Real-world Evidence (RWE) Generation - There is a strong push to leverage RWE for post-market surveillance studies and to potentially even replace or reduce the need for control arms in clinical trials. Virgo provides critical endoscopy video data that is missing from standard endoscopy reporting.

We recently announced our first pharma collaboration with Genentech — an independent subsidiary of Roche. We are incredibly excited about the opportunities for Virgo's technology to help pharma companies advance IBD treatment. Beyond IBD, we see similar opportunities in other therapeutic areas that involve the use of endoscopy such as eosinophilic esophagitis, celiac disease, idiopathic pulmonary fibrosis, and potentially even non-small cell lung cancer.

8) How is something like Virgo regulated? How does regulation of software treat things like model decay, software updates, etc.?

Virgo's cloud endoscopy video platform is what's known as a Medical Device Data System (MDDS). In 2011, the FDA down-classified MDDS from Class III (high-risk) to Class I (low-risk). Then in 2015, the FDA issued further guidance that the agency would not enforce general controls for MDDS based on "the low risk they pose to patients and the importance they play in advancing digital health." This ruling certainly helped Virgo get into market quickly.

That said, we still diligently focus on software development best practices and continual improvements to our platform. Because our devices are connected to the cloud, we capture a steady stream of logging and performance data. We are also able to initiate over-the-air (OTA) software updates. The majority of these updates center around performance enhancements, bug fixes, and security patches; however, we do also track model performance for our automated recording system.

As I mentioned earlier, our capture devices run machine learning models in real time to automatically determine when to start and stop video recording. This enables clinicians to capture their procedure video without any deviation from their normal clinical workflow.

At this point, based on the 100,000+ procedures we've captured, this model is highly generalizable, which means it tends to work very well out of the box in new situations. Rarely, we find that an edge case is causing a failure mode. This is most commonly surfaced through a user reporting that an expected procedure didn't get recorded. At that point we will work on retraining an updated model that solves the newly discovered edge case. With the new model in hand, we validate it against an updated validation data set, then release it as a staged beta to ensure no new issues are introduced before rolling the update out to the entire fleet of capture devices.
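The validate-then-stage process can be sketched in two small pieces. Both are assumptions for illustration rather than Virgo's implementation: the gate uses recall (the share of real procedures the model would have recorded), since a missed recording is the failure users report, and the rollout buckets devices by a hash of a hypothetical device ID so the same devices stay in the beta as the percentage grows.

```python
import hashlib
from typing import Sequence


def passes_validation(predicted: Sequence[bool], actual: Sequence[bool],
                      min_recall: float) -> bool:
    """Gate a candidate model on recall over a labeled validation set."""
    positives = sum(actual)
    caught = sum(p and a for p, a in zip(predicted, actual))
    return positives > 0 and caught / positives >= min_recall


def in_rollout(device_id: str, percent: int) -> bool:
    """Deterministically assign a device to the first `percent`% of the fleet."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100  # stable bucket in 0..99
    return bucket < percent


fleet = [f"device-{n:04d}" for n in range(1000)]  # hypothetical IDs
beta = [d for d in fleet if in_rollout(d, 10)]
print(passes_validation([True, True, False, True], [True, True, True, False], 0.6))  # True
print(len(beta))
```

Because buckets are stable, widening the rollout from 10% to 50% only adds devices; nothing already in the beta gets dropped back to the old model.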

9) I see Virgo has a patent, and it was a champagne poppin' moment. Are patents actually defensible here? What are you actually patenting in this case?

We're certainly proud of our patents — we actually have two now:

- Automated System for Medical Video Recording and Storage

- Automated Medical Note Generation System Utilizing Text, Audio and Video Data

The first covers Virgo's core platform and our ability to automate the video recording process based on real-time analysis of the actual endoscopy video stream. The second patent isn't something we've productized yet, but it involves using the combination of text, audio, and video data in recurrent neural networks to automatically generate a medical procedure note. For procedures in particular, we believe this combination of multiple data inputs is very important to build a viable note generation system.

While these patents are nice to have and can provide some protection, they certainly aren't the focal point of our defensibility strategy. I've been on the other side of trying to design around patents in my prior work, and with a will (and enough resources) there's usually a way.

Virgo's long-term defensibility centers around the network effects we're building within our platform and our growing data lead. Virgo power users often leverage the platform to conduct multi-center research with their endoscopy video data. As such, the more users are on Virgo, the more powerful the platform becomes for these research efforts. We also see a good amount of general video sharing between physicians.

We believe we have already established Virgo as the leading platform to conduct serious endoscopy video capture in gastroenterology. This is likely to provide more long-term defensibility than any patents could.

Bonus: I hear you're into astrophotography. How similar are the images of stars vs endoscopies?

I'll refrain from making the obvious joke about Uranus….

But there are definitely some similarities between astrophotography and using computer vision for endoscopy. I think of astrophotography as this really pure form of photography where you have to get creative to find success; after all, you're trying to capture a relatively minuscule number of photons from light-years away. So astrophotographers have come up with all of these really interesting computer vision techniques to capture multiple long exposures and stack them over time to pull out more detail than in any given individual photo. At the end of the day, photos of the stars and endoscopy videos are just grids of pixel values, which means they are really well-suited for computer vision analysis. For fun, here are some astrophotos I've taken with an iPhone (some are aided by a telescope, of course)!
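The stacking idea is easy to demonstrate numerically. If each exposure is the same scene plus independent random noise, averaging N aligned frames leaves the signal unchanged but shrinks the noise's standard deviation by roughly the square root of N. This sketch assumes the frames are already aligned (real stacking software registers them first) and uses simulated Gaussian noise; the pixel values are made up.

```python
import random
from statistics import pstdev
from typing import List

Frame = List[float]  # a flattened grid of pixel values


def stack_mean(frames: List[Frame]) -> Frame:
    """Average already-aligned exposures pixel by pixel."""
    n = len(frames)
    return [sum(pixel) / n for pixel in zip(*frames)]


def noise_level(frame: Frame, truth: Frame) -> float:
    """Standard deviation of the difference from the noise-free scene."""
    return pstdev([f - t for f, t in zip(frame, truth)])


random.seed(0)
truth = [10.0] * 1000  # a faint, flat patch of sky (illustrative)
exposures = [[t + random.gauss(0.0, 2.0) for t in truth] for _ in range(16)]

single = noise_level(exposures[0], truth)
stacked = noise_level(stack_mean(exposures), truth)
print(round(single / stacked, 1))  # close to sqrt(16) = 4
```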

[NK note: there’s another joke to be made about mooning too]

Thinkboi out,

Nikhil aka. “smokin’ on that endo-scope”

Twitter: @nikillinit

Healthcare 101 Starts soon!

Our crash course teaches the basics of US healthcare in a simple to understand and fun way. Understand who the different stakeholders are, how money flows, and trends shaping the industry. Each day we’ll tackle a few different parts of healthcare and walk through how they work with diagrams, case studies, and memes. Lightweight assignments and quizzes afterward will help solidify the material and prompt discussion in the student Slack group.

