Running Tests on Generative AI with Autoblocks

Get Out-Of-Pocket in your email

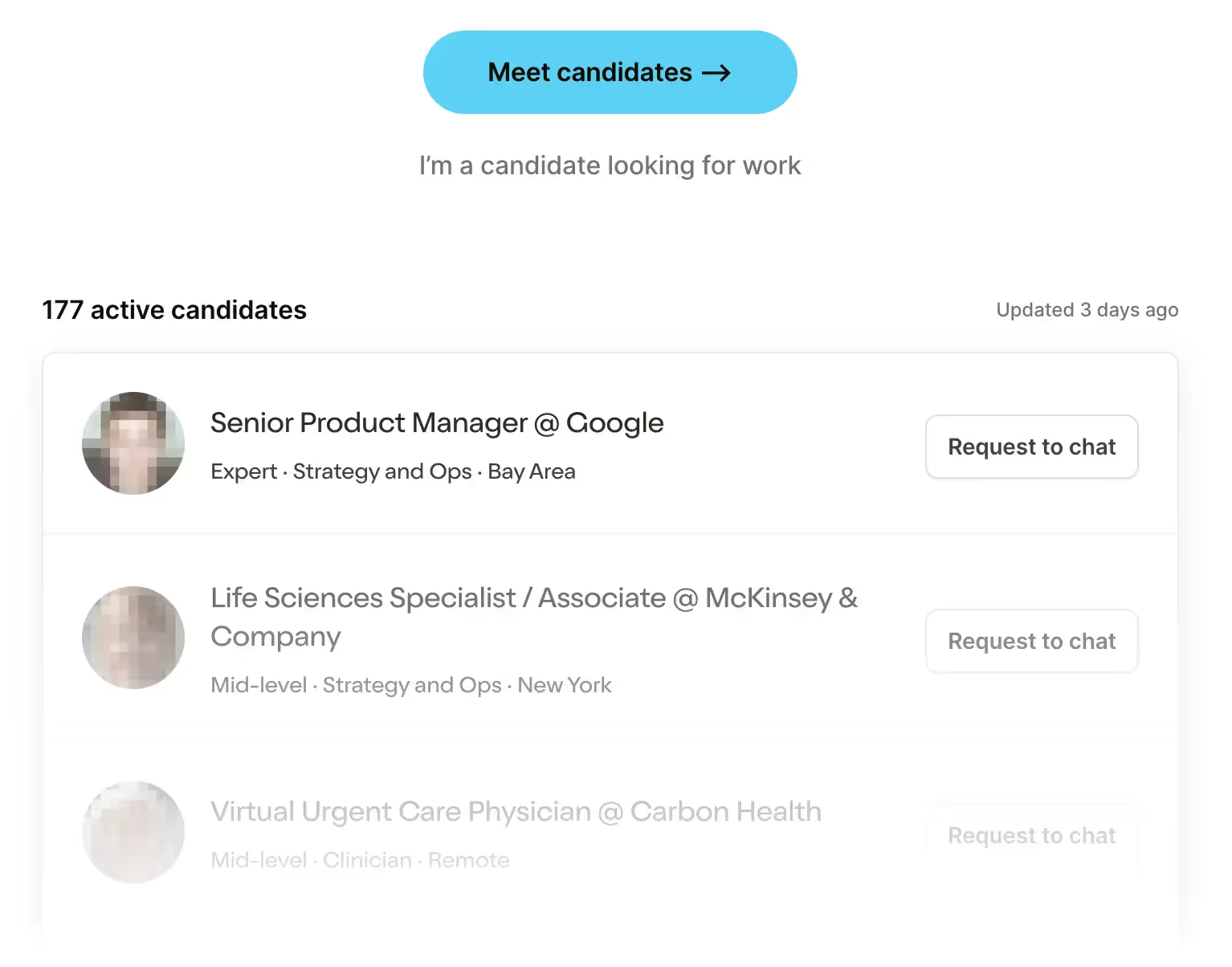

Looking to hire the best talent in healthcare? Check out the OOP Talent Collective - where vetted candidates are looking for their next gig. Learn more here or check it out yourself.

Hire from the Out-Of-Pocket talent collective

Hire from the Out-Of-Pocket talent collectiveClaims Data 101 Course

.gif)

Featured Jobs

Finance Associate - Spark Advisors

- Spark Advisors helps seniors enroll in Medicare and understand their benefits by monitoring coverage, figuring out the right benefits, and deal with insurance issues. They're hiring a finance associate.

- firsthand is building technology and services to dramatically change the lives of those with serious mental illness who have fallen through the gaps in the safety net. They are hiring a data engineer to build first of its kind infrastructure to empower their peer-led care team.

- J2 Health brings together best in class data and purpose built software to enable healthcare organizations to optimize provider network performance. They're hiring a data scientist.

Looking for a job in health tech? Check out the other awesome healthcare jobs on the job board + give your preferences to get alerted to new postings.

TL;DR

Autoblocks makes it easier for companies to test their generative AI applications before they get put in front of users. They create tools that help map your genAI workflows and then set up a bunch of simulated tests + human reviewers to check where you have issues. This helps you catch AI issues before users do.

Their bet is that generative AI in healthcare is going to grow and the fault tolerance in healthcare is way lower, meaning testing is going to be a key part of procurement and product development. Some issues they may face include companies choosing to skip extensive testing/build testing procedures in-house, a steep learning curve for people to learn how to do testing correctly, and genAI potentially being too early.

We go into all of that below.

This is a sponsored post. You can read more about my rules/thoughts on sponsored posts here. If you’re interested in having a sponsored post done, we do four per year. You can inquire here.

_______

Company Name - Autoblocks

Autoblocks is a company that makes it easy for you to run tests on your generative AI applications to make sure they don’t go off the rails and understand why they did.

I wanted to explain them as “Quality Assurance testing for generative AI”. They said that wasn’t sexy enough. They wanted to say “Determinism-as-a-service”. I said we’re not doing that here.

Transformers are a key technical breakthrough that enabled the Large Language Model (LLM) wave we see today. Which is why it’s only fair that they name their company…

AUTOBLOCKS, ROLL OUT.

What’s the Pain Point Being Solved?

A lot of the new generative AI applications are non-deterministic. You can input the same prompt, and it’s not guaranteed you’ll get the same output each time (in fact, it’s very likely to be different). This is different from a lot of traditional software where if one thing goes in, you’ll very reliably get the same output out each time.

This lack of determinism is a double edged sword. On the one hand these genAI applications can handle a much wider array of use cases without needing to constantly update their rules. On the other hand, things can go off the rails in bad ways if a certain edge case scenario or bad actor tries to get the AI to do things it's not supposed to.

For example - an airline chatbot told a customer there was a discount that didn’t exist for their flight. Then the customer bought a ticket and the discount was never applied, so the customer sued the airline. The airline tried to argue the chat was a separate legal entity responsible for its own actions, but lost the case and had to pay the customer.

This is a small scale example, but imagine this kind of off the rails behavior in a patient-facing setting. Like your genAI tool auto-responds to a patient and says “yeah it’s cancer, damn that’s crazy” in response to a cholesterol test. Not great, Bob!

So, now companies are putting more guardrails around their AI or training it on more specific datasets to get the outputs to stay within a certain range of answers. The liability and high risk nature of the interactions in healthcare basically means you need an airtight process of testing these applications before they start actually doing things.

But how do you actually test to make sure it’s chill before releasing it into the real-world?

What does Autoblocks do?

Autoblocks makes it much easier for you to test your GenAI applications in a bunch of different ways. They want to make it easier for companies to understand how changes to their systems will change the outputs of their applications in a way that’s traceable. They want to make your test environment and your real environment as similar as possible so you catch AI issues before your users do.

There are a few different components for how this works.

First, we need to figure out WHAT needs to be tested based on the type of application you have. A voice AI application needs to be tested differently than an AI scribe or automated patient messages. Even between two AI scribes, they might have different settings or user bases and expect to see different inputs/outputs.

You can connect Autoblocks to your codebase or build a new AI app from scratch in their workflow builder. At the end, you’ll have a visual representation of the different components of your application, their inputs, and their outputs.

The visualization and testing tools are all usable by technical or non-technical friends, so you can collaborate together on everything. Test engineers are unanimously voted as “most likely to kill the vibe”. And now we can share that burden.

From here you need to start building out tests! There’s different kinds of testing.

- You can create a bunch of different test prompts that run through your text fields.

- You can create simulated “personas” that your own agents interact with and see what the interaction looks like. These can be voice or text agents. You can give them personalities like ”Dr. Octopus in an inpatient setting” or “Karen”.

- You can do something called Vin Dieseling. This is where Autoblocks purposely tries to break your system, gets identifiable information, and becomes a bad actor.

- You can also create tests that require an expert to actually review the output and grade it. Autoblocks contracts these reviews out to third-party graders with medical expertise as well, or can integrate your own in-house experts into the review.

To make these tests, you can upload the ones you already run to check your applications (e.g. prompts you give to see the outputs). Autoblocks also has a bunch of out-of-the-box standard tests that you can use based on the kinds of flows you built, and then you can customize.

Running the test is one aspect, but you can also create different types of evaluations you want your applications to be particularly good in. For example, you might want one type of evaluation that tests for accuracy, another that tests for consistency, and a different one that tests for bias. You can create different evaluators either in code or as a LLM prompt, and it’ll evaluate the outputs based on those dimensions.

At this point, you have the different types of tests + evaluators created, you can start running a bunch of tests against your applications and it’ll show you how it performed, where it failed, and how it changed over multiple runs if you made changes in between them. You can run multiple hundreds of tests in seconds vs. manual verification. This is bot-on-bot violence, and we are choosing it.

And with that, you’ve basically put your apps through a bunch of different types of tests, created a traceability log of what went wrong, and evaluated them against different dimensions you care about. Autoblocks will also show you where it thinks you should be adding more test coverage and make suggestions on missing tests. Maybe this is the universe’s sign that you should risk it all 😏.

What Is The Business Model And Who Is The End User?

Autoblocks charges a subscription fee to companies that use it. It’s a company mitigating risk…obviously they’re gonna pick the least risky pricing model.

The users are typically engineers and product teams at healthcare companies deploying external facing AI applications (e.g. to patients, clinicians, voice agents to call companies, etc.). Or, people that just really like antagonizing AI I guess.

Job Openings

Autoblock is currently hiring:

Out-Of-Pocket Take

A few things I like about Autoblocks:

Public facing testing - One neat thing Autoblocks is trying to do is create a public facing “Trust Center”. Basically, it lets companies show externally what their testing process looks like, how well they’ve been performing on their tests, and how companies compare in processes/performance against each other. You see an example here.

It’s smart because it’s a way for companies to use testing as a point of marketing, especially in an area like healthcare where accuracy is a #1 concern. And it’s good for the ecosystem who can now more easily see how other companies choose to run their own testing, and model after them.

Testing as a part of product development - At other companies I’ve worked at, testing was done as a “cover your ass” act. We’d build products, then after the fact we’d run tests to make sure nothing broke. Product roadmaps were independent of testing.

When the product outputs are non-deterministic, testing actually becomes a key part of product development. Every tweak you make, you’re trying to see the range of outputs since it’s different every time and there are a million edge case scenarios. This ends up informing changes you need to make in the product itself, and is a very different way of thinking about where testing fits in.

In the coming world of generative AI, this feels like it’s going to become more normal. Autoblocks is building tools that let technical and non-technical people build and review their own tests. This makes testing a more collaborative process and an embedded part of the building process. This is actually the core problem of their case study with Hinge Health that they just put out.

This is especially important in healthcare where understanding where things went wrong might require subject matter expertise, so embedding things like human expert reviews as a built-in part of the evaluation process is smart.

Healthcare as a jumping off point - Healthcare is like New York…if you can make it here, you can make it anywhere. But the rent you gotta pay is crazy. Also there are rats everywhere.

Healthcare probably has the highest risk scenarios and things really cannot go wrong, so it makes sense that Autoblocks is doubling down here. You can imagine that for healthcare procurement of AI solutions, reliable testing before deployment is going to become a requirement.

After healthcare, Autoblocks can be used in lots of other industries that are regulated, like financial services, energy, etc. This makes their potential market size much bigger than just healthcare. I know the VCs reading love to salivate over this topic, specifically.

–

As with all companies, here are a few things Autoblocks might struggle with as it grows:

Competition - I think competition has a few different vectors here. The first is companies not viewing this as a priority enough to pay for an external tool for this, or rely on in-house testing. Pressure to do more formalized testing will most likely have to come from buyers and procurement processes for companies to care enough about this to use a testing suite like Autoblocks.

Another vector of competition is third-party certification organizations that run tests on the company. For example, consortiums like CHAI are trying to build “best practices” for AI development in healthcare. You can see a world in which consortiums that represent the buyers create their own “standard” for acceptable testing. It’s also possible that the certifying organizations set up the rules on what they expect to be tested, and then Autoblocks provides the practical implementation of actually running those tests.

Learning Curve - The pro and con of everyone collaborating on testing is that you need to teach a lot of new behaviors to people. If you’re not a test engineer, you’ve probably never thought about all the different ways your product needs to be tested. Autoblocks’ utility within a company is dependent on everyone “getting it”, which is going to require a lot people investing time in learning how this works.

They do have a lot of things that make it easy to get started. They have starter testing templates you can use and they’ll even deploy an engineer to “red team” your application which sounds sexual but is apparently just looking for flaws (which is sexual to someone). Companies will likely have point people for testing, but what will make this product sticky are the collaborative features which is also a higher lift.

Market Timing - The question on everyone’s mind is whether generative AI is a bubble or if it’s going to take over the world in five years. Autoblocks is building with the assumption that generative AI is here to stay and will continue to grow (and likely is propping up the entirety of San Francisco, currently).

However, if it turns out to be a bubble, then they're as exposed as a child predator on a Chris Hansen show. But I’m sure they have a back up plan…probably becoming a revenue cycle management company.

Conclusion And Parting Thoughts

It’s a little unclear if the entire Out-Of-Pocket ecosystem is now also supported by the generative AI ecosystem, but I’m trying not to think about it too much.

Testing is going to be a key part of this ecosystem if we want AI apps to actually reach their full potential without too much human intervention. Autoblocks is trying to plant their flag as the best-in-class testing solution for companies. Bringing testing from the shadows no one wants to go and into the product development roadmap is a tall order, but it’ll be awesome if they can pull it off.

Thinkboi out,

Nikhil aka. “Autoblock boy JB”

Twitter: @nikillinit

IG: @outofpockethealth

Other posts: outofpocket.health/posts

--

{{sub-form}}

If you’re enjoying the newsletter, do me a solid and shoot this over to a friend or healthcare slack channel and tell them to sign up. The line between unemployment and founder of a startup is traction and whether your parents believe you have a job.

Interlude - Courses!!!

See All Courses →We have many courses currently enrolling. As always, hit us up for group deals or custom stuff or just to talk cause we’re all lonely on this big blue planet.

Interlude - Courses!!!

See All Courses →We have many courses currently enrolling. As always, hit us up for group deals or custom stuff or just to talk cause we’re all lonely on this big blue planet.