The Engineering Behind Healthcare LLMs with Abridge

Get Out-Of-Pocket in your email

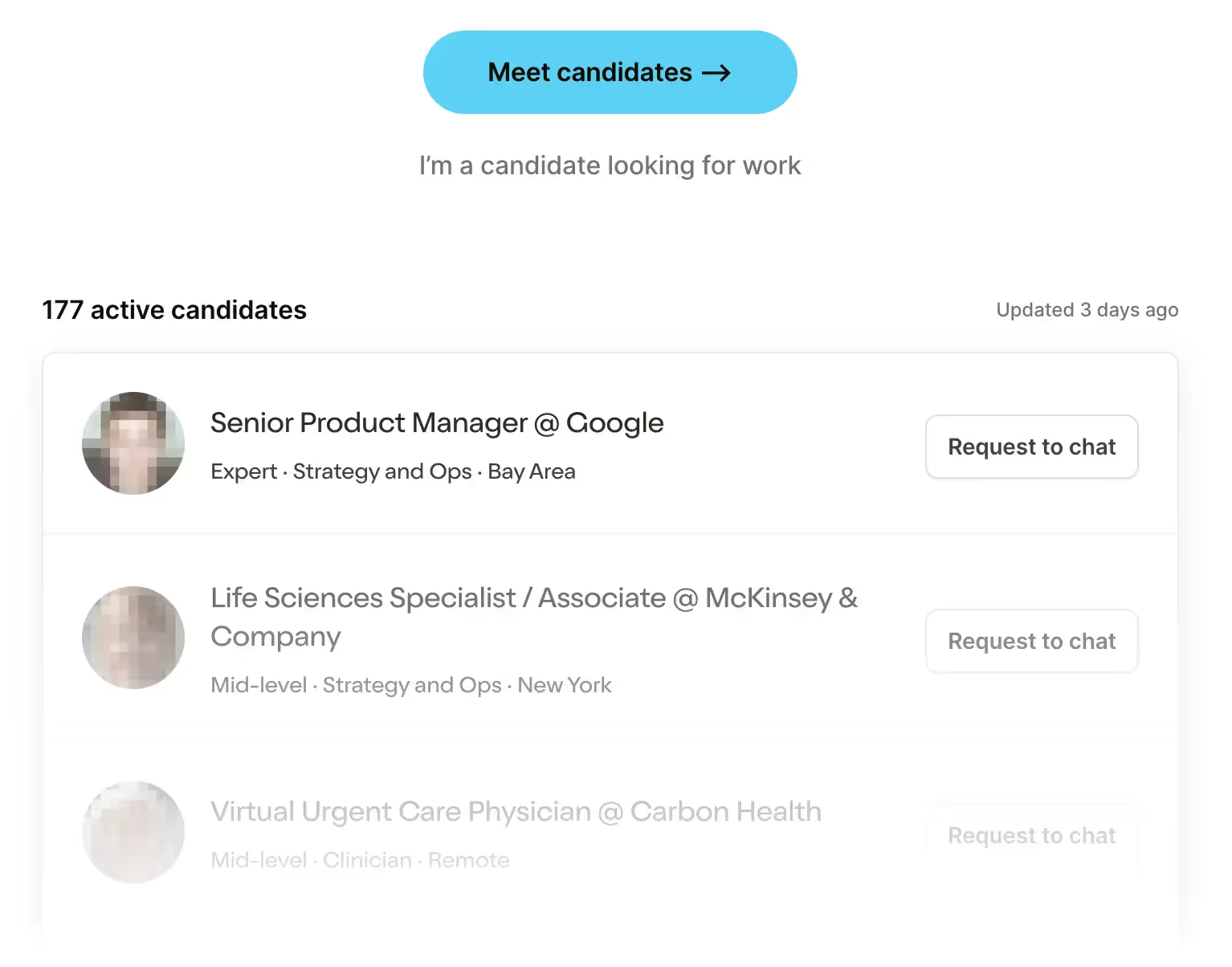

Looking to hire the best talent in healthcare? Check out the OOP Talent Collective - where vetted candidates are looking for their next gig. Learn more here or check it out yourself.

Hire from the Out-Of-Pocket talent collective

Hire from the Out-Of-Pocket talent collectiveHealthcare 101 Crash Course

%2520(1).gif)

Today I’m chatting with Davis Liang at Abridge, the company that turns voice encounters in healthcare into reports, data, etc. We’ve talked in the past about the business and product side of Abridge, but today I wanted to go a bit deeper on the engineering challenges that a company building its own healthcare LLMs deals with.

We talk about comparing generalist LLMs vs. healthcare specific ones, how to evaluate model drift, and some of the interesting scenarios like a new drug coming out/multilingual encounters.

This is a sponsored post - you can read more about my rules/thoughts on sponsored posts here. If you’re interested in having a sponsored post done, let us know here.

--

1) Background, current role and latest cool project.

I’ve lived in the Bay Area almost all my life, moving back after graduate school to work in the tech industry that I grew up alongside. I started my career as a software engineer at Yahoo working on ranking and recommender systems before taking on roles in machine learning science at Amazon AWS AI and Meta AI. These days, I work at Abridge as a staff machine learning scientist and manager (we’re hiring!).

I’m currently working closely with Epic and Mayo Clinic to develop an ambient solution for nurses that helps them focus more on the patient care that actually gives them energy and less on administrative tasks. In the last few weeks, we spent time shadowing nurses in various Mayo Clinic locations across the United States. Being elbow-to-elbow with nurses at the front lines of patient care is something new to me, and it allowed us to see past the model, past the data, and into the experience of patients and nurses. Truly an experience of a lifetime.

2) Let’s start with the tougher question because I’m a mean person. What stops some of the more general foundation models from doing what Abridge does?

Large Language Models are undeniably important, but they’re only one small part of a larger system for generating clinical notes that a doctor can safely and efficiently file into the EHR. Fitting into a doctor’s workflow requires some unique characteristics.

A few immediate things come to mind:

- Robustness against a wide range of noise conditions, such as hospital sounds and background chatter.

- A rigorous human evaluation process and proper evaluation metrics (e.g. tracking transcription quality for novel medications).

- Medically tuned automatic speech recognition (ASR) capabilities – if your transcribed conversations are low-quality, or missing recent medical terminology or medication names, your resulting note will be too.

- Trust-building tools. We have a feature called Linked Evidence, which lets doctors highlight parts of the generated SOAP note, and we then surface the supporting evidence from the transcript.

- Accessibility features like multilingual capabilities beyond existing LLM capabilities to serve the vast populations of non-English speakers in America.
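To make the Linked Evidence idea from the list above concrete, here is a minimal sketch of surfacing transcript evidence for a highlighted note span. It is an illustration only and not Abridge's method: the `find_evidence` helper, the word-overlap (Jaccard) scoring, and the sample transcript are all assumptions made for this example, and a production system would likely rely on learned models rather than word overlap.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def find_evidence(highlight, transcript, top_k=1):
    """Rank transcript utterances by word overlap with the highlighted span.

    Hypothetical helper: Jaccard overlap stands in for whatever matching
    a real system would use.
    """
    query = tokenize(highlight)
    scored = []
    for idx, utterance in enumerate(transcript):
        words = tokenize(utterance)
        union = query | words
        score = len(query & words) / len(union) if union else 0.0
        scored.append((score, idx))
    # Highest score first; ties keep transcript order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [transcript[idx] for score, idx in scored[:top_k] if score > 0]


# Made-up transcript for illustration.
transcript = [
    "Doctor: How have you been sleeping lately?",
    "Patient: Not great, I wake up around three most nights.",
    "Doctor: Let's start you on metformin 500 milligrams twice a day.",
]
evidence = find_evidence("Started metformin 500 mg twice daily", transcript)
print(evidence)
```

Word overlap misses paraphrases ("mg" vs. "milligrams", "daily" vs. "a day"), which is one reason a real evidence-linking feature would need something stronger than this sketch.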

3) Abridge has been around for a while, can you give me some key technological things that happened which made the product today possible?

There was no single turning point, but rather several step-function improvements that developed the technology into what it is today. The first big innovation was the invention of the transformer architecture in 2017, which demonstrated significant improvements in tasks like neural machine translation (NMT).

The era of foundation models like BERT followed shortly after. These models were pretrained using denoising tasks like masked language modeling (a technique where some words in a sentence are hidden, and the model learns to predict the missing words based on the context of the remaining words) on large datasets such as English Wikipedia and Common Crawl.
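As a rough illustration of the masked language modeling setup described above, the sketch below hides a random subset of words and records which words the model would be asked to recover. It is a simplification: the `mask_tokens` helper, the whitespace tokenization, and the masking rate are assumptions for this example, and real pretraining recipes operate on subword tokens with additional details.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens; return (masked input, targets).

    `targets` maps each masked position to the original word, which is
    what the model would be trained to predict from the remaining context.
    """
    rng = random.Random(seed)
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[i] = tok
        else:
            masked.append(tok)
    return masked, targets


tokens = "the patient was prescribed metformin for type 2 diabetes".split()
masked, targets = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(" ".join(masked))
print(targets)
```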

BERT-style models became the norm – with just a bit of finetuning with task-specific heads (additional layers added on top of a base model to fine-tune its outputs for specialized tasks), one could expect state-of-the-art capability on question answering, retrieval, named entity recognition, and much, much more.

Around 2018 to 2019, pretrained encoder-decoder and decoder-only models like GPT, T5, and BART were being leveraged in tasks like generative QA, translation, and summarization. These models circumvented the need for “task-specific heads”, making them significantly more flexible and less cumbersome to finetune and use. As hardware capabilities and stability improved, we started training even larger models on massive datasets, leveraging increasingly powerful GPU clusters. Additionally, decoder models, which could leverage the causal language modeling task for pretraining, were significantly more sample-efficient at scale.
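For contrast with masked language modeling, causal language modeling asks the model to predict each next token from everything before it. The sketch below only shows how training examples fall out of a single sentence; the `next_token_examples` helper and the whitespace tokenization are assumptions for illustration.

```python
def next_token_examples(tokens):
    """Turn one token sequence into (context, next token) training pairs."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


tokens = "patient reports mild headache".split()
for context, target in next_token_examples(tokens):
    print(context, "->", target)
```

Every position after the first becomes a prediction target, so a sequence of n tokens yields n - 1 training pairs.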

[NK Note: Imagine needing to know all the acronyms for healthcare and generative AI, I think I’d physically fight someone.]

I think we were all sort of surprised by how capable ChatGPT was when it was first released. It was an inflection point of sorts where the popular opinion shifted around how useful decoder-only generative models could be. Regardless of your qualms about this technology, I think it’s safe to say that the baseline capability of ML models has significantly improved over the last couple of years and they’re becoming more and more feasible in real-world applications. Of course, they’re still not perfect and we have to carefully develop guardrails around these models for more sensitive and high stakes use-cases.

4) You gave a talk at our hackathon about how multiple languages actually make the transcribing problem hard. Can you first describe why it’s a uniquely tough problem?

Even the most capable models today degrade when used for non-English languages, especially low-resource ones. For example, over 1 million individuals speak Haitian Creole in the United States. However, state-of-the-art models like ChatGPT score 40% lower (in terms of accuracy) on simple multiple-choice questions in Haitian Creole when compared to English [paper].

Clearly, there’s a lot of room for improvement on the modeling side (e.g. tokenizers and vocabularies built with multilingual capability in mind), but there’s also a data scarcity problem (it’s hard to find clean data for the long tail of languages spoken around the world). Multilingual capability is still an ongoing research challenge and I don’t expect it to be completely solved anytime soon.

[NK note: One interesting thing in the paper is that the models perform better on languages written in their native script vs. in the English alphabet. But there’s much more training data online in the English alphabet for those given languages.]

5) Have you seen any unintended ways people have used the product that are interesting?

We’ve had clinicians using Abridge for inpatient admissions because it worked so well for outpatient visits. In the inpatient setting, patients often have more severe conditions requiring continuous monitoring, multiple interactions, and complex multidisciplinary care. This generally results in conversations that are more frequent, more complex, and involve multiple team members, making the documentation process challenging.

We noticed this type of usage and now have intentional pilots that help us to improve performance for those inpatient admissions conversations. We love seeing users take Abridge with them to other settings because it helps us understand where the gaps are and how we can best serve clinicians in their day-to-day.

[NK Note: This technology should be embedded into apartments, record all conversations between couples, and then provide them with the answers to who is correct in all the pettiest arguments. This might be a personal ask.]

6) What’s your favorite generative AI paper and why? You can give one Abridge paper, but you have to give one non-Abridge paper.

In the era of large language models (LLMs), properly evaluating these models continues to be a challenge. For example, these LLMs are potentially trained on an arbitrary portion of public evaluation datasets, making their capabilities hard to quantify. In a recent paper I co-authored, we developed a dataset called Belebele.

In Belebele, human annotators and state-of-the-art models like ChatGPT were given a short passage from Wikipedia and a question about that passage along with 4 possible answers. Humans were able to consistently get a near perfect score with 97.6% accuracy on average on the English test set while ChatGPT struggled, obtaining 87.7% accuracy, despite the contemporaneous fanfare about how GPT-4 was outperforming 90% of individuals trying to pass the bar.

Additionally, papers like FActScore attempt to bridge the gap between classic metrics like ROUGE or BLEU, which are too rigid for abstractive tasks, and human evaluation, which is expensive and time consuming. The authors showed that breaking a model-generated output into a series of atomic facts (short statements that each contain one piece of information) and assigning a binary label to each atomic fact (whether this fact is supported by a reliable information source) allows a fine-grained evaluation of factual precision.
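The scoring step described above can be sketched in a few lines: once an output has been split into atomic facts and each fact has a binary label, factual precision is the fraction of facts that are supported. The facts and labels below are made up and hand-assigned for illustration; in practice the splitting and labeling are the hard parts, and `factual_precision` is a hypothetical helper name.

```python
def factual_precision(labels):
    """Fraction of atomic facts labeled as supported (True)."""
    if not labels:
        raise ValueError("need at least one atomic fact")
    return sum(labels) / len(labels)


# Hypothetical atomic facts from one generated note, with hand-assigned
# labels saying whether a reliable source supports each one.
atomic_facts = [
    ("The patient takes metformin.", True),
    ("The dose is 500 mg.", True),
    ("The patient has type 2 diabetes.", True),
    ("The patient reports chest pain.", False),
]
score = factual_precision([supported for _, supported in atomic_facts])
print(f"factual precision: {score:.2f}")
```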

As a part of the larger scientific community, there are many interesting questions that we still haven’t asked (in particular, how do we think about evaluation through the lens of supporting clinical care) and we’re working on asking and answering these questions at Abridge as we speak. Stay tuned for our upcoming papers!

7) How do you all think about model drift and whether a model actually gets worse after being deployed to a hospital? What’s the process for monitoring that and addressing it?

That’s a great question! The world of healthcare is constantly evolving as new medications like Ozempic and diseases like COVID-19 become ubiquitous overnight – as the world changes, we need to be able to monitor how the data drifts and update our models accordingly to capture these changes.

Before deploying any model, we conduct rigorous evaluations with licensed clinicians, including blinded head-to-head trials between the new and existing model. Post-deployment, we continuously monitor performance through both quantitative metrics, such as edits made to AI-generated notes and star ratings, and qualitative feedback from clinicians. This is a big part of Abridge culture – we read every single piece of clinician feedback, and whether it’s a love letter or constructive criticism, we take it seriously.
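One way to picture the "edits made to AI-generated notes" signal is as an edit rate between the generated note and the version the clinician finally files, tracked over time. The sketch below is an assumption about how such a metric could be computed, not a description of Abridge's monitoring: `edit_rate`, `drifted`, and the `tolerance` threshold are hypothetical, and word-level `difflib` matching is just a convenient stand-in.

```python
from difflib import SequenceMatcher


def edit_rate(generated, final):
    """Share of the note that changed, based on word-level similarity.

    0.0 means the clinician filed the note unchanged; 1.0 means no words
    were kept.
    """
    matcher = SequenceMatcher(None, generated.split(), final.split(), autojunk=False)
    return 1.0 - matcher.ratio()


def drifted(baseline_rates, recent_rates, tolerance=0.05):
    """Flag drift when the recent mean edit rate exceeds the baseline mean
    by more than `tolerance` (a hypothetical threshold)."""
    baseline = sum(baseline_rates) / len(baseline_rates)
    recent = sum(recent_rates) / len(recent_rates)
    return recent - baseline > tolerance


rate = edit_rate("patient reports mild headache", "patient reports severe headache")
print(f"edit rate: {rate:.2f}")
print(drifted([0.10, 0.10], [0.30, 0.20]))
```

A rising edit rate does not say why clinicians are editing more, so a signal like this would complement, not replace, the qualitative feedback mentioned above.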

8) What’s the org design of an engineering team for a product like this? Are there any stark differences between the design of an engineering team for a gen AI product vs. a regular software/tech product?

AI products, in general, require specialized roles such as research scientists, machine learning engineers, and data engineers. Research scientists develop new features by experimenting with and optimizing machine learning models to perform specific tasks (like note generation). Machine learning engineers can then take these models and deploy them at scale, focusing on aspects like model optimization, serving infrastructure, and integration with existing systems. Data engineers also play a crucial role by building and maintaining the data pipelines that supply the necessary data for training.

As with any software product, platform engineers, UI/UX researchers, mobile developers, and backend software engineers also play critical roles in a generative AI product. On the other hand, a role that’s often overlooked in these conversations is that of the data annotators, who are a crucial part of the development process for both optimization and evaluation of models. This is especially true for highly complex domains like healthcare, where data annotators are subject matter experts like licensed doctors, nurses, and researchers.

Bonus Question: What is something interesting that you use large language models for in your personal life?

As an academic researcher, I particularly enjoy attending poster sessions at academic conferences. These sessions are where I get the chance to dive into discussions with authors, ask burning questions, and really explore their ideas in a way that just isn’t possible when I’m reading the paper on my own.

So, sometimes, I’ll actually turn to a large language model after reading a paper, just to see if I can replicate some of that interaction—give it the paper as context, ask it questions, and bounce ideas around. It’s not the same as chatting IRL, but it’s a pretty cool way to contextualize research at home!

[NK note: If any of you do this with Out-Of-Pocket posts I’ll hunt you down.]

Thinkboi out,

Nikhil aka. “Beating himself up over not having a disposition towards statistics as a child”

Twitter: @nikillinit

IG: @outofpockethealth

Other posts: outofpocket.health/posts

--

If you’re enjoying the newsletter, do me a solid and shoot this over to a friend or healthcare slack channel and tell them to sign up. The line between unemployment and founder of a startup is traction and whether your parents believe you have a job.

Healthcare 101 Starts soon!

Our crash course teaches the basics of US healthcare in a simple to understand and fun way. Understand who the different stakeholders are, how money flows, and trends shaping the industry.

Each day we’ll tackle a few different parts of healthcare and walk through how they work with diagrams, case studies, and memes. Lightweight assignments and quizzes afterward will help solidify the material and prompt discussion in the student Slack group.

Interlude - Our 3 Events + LLMs in healthcare

We have 3 events this fall.

Data Camp sponsorships are already sold out! We have room for a handful of sponsors for our B2B Hackathon and for our OPS Conference, both of which already have a full house of attendees.

If you want to connect with a packed, engaged healthcare audience, email sales@outofpocket.health for more details.